Notes on training YOLOv4 on VisDrone

Author: Internet

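Preparation

See the referenced post for dataset preparation; the details are not repeated here.

Check the labels for errors, such as boxes whose w or h is 0 and duplicate annotations. Adding a few checks to the conversion code is enough to filter them out.

Only the task 1 image set was used.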
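A minimal sketch of such a filter, assuming the standard VisDrone-DET annotation layout (bbox_left, bbox_top, bbox_width, bbox_height, score, object_category, truncation, occlusion) and YOLO-style normalized output. The function name `visdrone_to_yolo` and the decision to drop categories 0 (ignored regions) and 11 (others) are illustrative assumptions, not the conversion script the post refers to.

```python
def visdrone_to_yolo(lines, img_w, img_h):
    """Convert VisDrone-DET annotation lines to YOLO label lines.

    Drops malformed lines, boxes with zero width or height,
    categories outside 1..10, and exact duplicate annotations.
    """
    seen = set()
    out = []
    for line in lines:
        fields = line.strip().rstrip(",").split(",")
        if len(fields) < 6:
            continue  # malformed or empty line
        left, top, w, h = (int(v) for v in fields[:4])
        category = int(fields[5])
        if w <= 0 or h <= 0:
            continue  # degenerate box
        if not 1 <= category <= 10:
            continue  # assumption: skip ignored regions (0) and others (11)
        key = (left, top, w, h, category)
        if key in seen:
            continue  # duplicate annotation
        seen.add(key)
        cx = (left + w / 2) / img_w
        cy = (top + h / 2) / img_h
        out.append(
            f"{category - 1} {cx:.6f} {cy:.6f} {w / img_w:.6f} {h / img_h:.6f}"
        )
    return out
```

The class index is shifted by one so that the ten VisDrone categories map to 0..9, matching classes=10 in the cfg.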

Configuration

anchors

Compute the anchors with [darknet](https://github.com/AlexeyAB/darknet):

./darknet detector calc_anchors data/visdrone.data -num_of_clusters 8 -width 800 -height 800

5, 10, 9, 23, 19, 17, 17, 41, 37, 32, 31, 72, 65, 65, 97,134

If GPU memory is insufficient, reduce the network size to 416, 608, etc.
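For intuition, anchor clustering can be sketched as k-means over box (w, h) pairs using IoU as the similarity measure, the approach popularized by YOLOv2. This is only an illustration under that assumption: the function names are hypothetical, and darknet's calc_anchors may differ in distance metric and initialization. Boxes are expected in pixels at the network size (800 here).

```python
import random


def iou_wh(box, centroid):
    """IoU of two boxes given only (w, h), as if they shared a corner."""
    inter = min(box[0], centroid[0]) * min(box[1], centroid[1])
    union = box[0] * box[1] + centroid[0] * centroid[1] - inter
    return inter / union


def kmeans_anchors(boxes, k, iters=100, seed=0):
    """Cluster (w, h) pairs into k anchors, sorted by area."""
    rng = random.Random(seed)
    centroids = rng.sample(boxes, k)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            # assign each box to the centroid it overlaps most
            best = max(range(k), key=lambda i: iou_wh(box, centroids[i]))
            clusters[best].append(box)
        new_centroids = []
        for i, members in enumerate(clusters):
            if not members:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
                continue
            mean_w = sum(b[0] for b in members) / len(members)
            mean_h = sum(b[1] for b in members) / len(members)
            new_centroids.append((mean_w, mean_h))
        if new_centroids == centroids:
            break  # assignments are stable
        centroids = new_centroids
    return sorted(centroids, key=lambda c: c[0] * c[1])
```

Sorting by area keeps the small anchors first, which is the order the mask indices in the cfg rely on.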

visdrone.cfg

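Because most objects in VisDrone are small or medium-sized, the last yolo layer of YOLOv4 is removed: delete everything below the second yolo layer, then update the anchors.

Change classes to 10 and num to 8, which gives two scales with 4 anchors each. The corresponding filters value changes from 255 to 60 ((10+5)*4). The final layer is configured as follows: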

[convolutional]

size=1

stride=1

pad=1

filters=60

activation=linear

[yolo]

mask = 4,5,6,7

anchors = 5, 10, 9, 23, 19, 17, 17, 41, 37, 32, 31, 72, 65, 65, 97,134

classes=10

num=8

jitter=.3

ignore_thresh = .7

truth_thresh = 1

scale_x_y = 1.1

iou_thresh=0.213

cls_normalizer=1.0

iou_normalizer=0.07

iou_loss=ciou

nms_kind=greedynms
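The filters and mask values above follow directly from the class and anchor counts. A small check of that arithmetic (the helper names are made up for illustration):

```python
def yolo_head_filters(classes, anchors_per_scale):
    """Filters of the conv layer feeding a yolo layer.

    Each anchor predicts 4 box coordinates, 1 objectness score,
    and one score per class.
    """
    return (classes + 5) * anchors_per_scale


def yolo_masks(num_anchors, num_scales):
    """Split anchor indices evenly across the yolo layers."""
    per_scale = num_anchors // num_scales
    return [
        list(range(s * per_scale, (s + 1) * per_scale))
        for s in range(num_scales)
    ]


print(yolo_head_filters(80, 3))  # stock YOLOv4 (COCO): 255
print(yolo_head_filters(10, 4))  # this VisDrone cfg: 60
print(yolo_masks(8, 2))          # [[0, 1, 2, 3], [4, 5, 6, 7]]
```

The same filters value applies to the convolutional layer in front of each remaining yolo layer, not only the last one.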

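Training (2080Ti, 32G, i5)

Training uses the ultralytics implementation. If RAM is limited, drop --cache-images. Setting --img-size to the network size avoids a second internal resize; for this, the following line has to be commented out: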

assert math.fmod(imgsz_min, gs) == 0, '--img-size %g must be a %g-multiple' % (imgsz_min, gs)

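If training is interrupted, use --resume (the official advice is not to resume but to retrain from scratch). Presumably the checkpoint stores the optimizer state and similar information; if you are worried that changed parameters will affect the resumed run, convert the checkpoint to darknet weights first (see the convert call after the training commands).

Because the dataset contains many hard examples, set fl_gamma to 2 to enable focal loss.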

python3 train_visdrone.py --cfg cfg/visdrone.cfg --data data/visdrone.data --weights weights/yolov4.conv.137 --cache-images --img-size 800 800 --epochs 300 --batch-size 2

python3 train_visdrone.py --cfg cfg/visdrone.cfg --data data/visdrone.data --resume --cache-images --img-size 800 800 --epochs 300 --batch-size 2

from models import *

convert('cfg/visdrone.cfg', 'weights/best.pt')
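For reference, the effect of the focal loss gamma mentioned above can be sketched in plain Python for a single logit. This is the standard formulation from the focal loss paper, not the ultralytics code; the alpha default of 0.25 is an assumption taken from that paper.

```python
import math


def bce_with_logits(logit, target):
    """Numerically stable binary cross-entropy on a raw logit."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def focal_loss(logit, target, gamma=2.0, alpha=0.25):
    """Binary focal loss: alpha_t * (1 - p_t)**gamma * BCE."""
    bce = bce_with_logits(logit, target)
    p_t = math.exp(-bce)  # probability assigned to the true class
    alpha_t = alpha if target == 1 else 1.0 - alpha
    return alpha_t * (1.0 - p_t) ** gamma * bce


# An easy positive (confident and correct) is down-weighted far more
# than a hard positive (confident and wrong).
easy = focal_loss(4.0, 1) / bce_with_logits(4.0, 1)
hard = focal_loss(-2.0, 1) / bce_with_logits(-2.0, 1)
print(easy < hard)  # True
```

With gamma set to 0 the modulating factor disappears and the loss reduces to alpha-weighted cross-entropy, which is why a non-zero fl_gamma is what switches focal loss on.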

To make it easier to compare curves in Tensorboard, the logging code can be changed as follows:

    # Tensorboard
    if tb_writer:
        # tags = ['train/giou_loss', 'train/obj_loss', 'train/cls_loss',
        #         'metrics/precision', 'metrics/recall', 'metrics/mAP_0.5', 'metrics/F1',
        #         'val/giou_loss', 'val/obj_loss', 'val/cls_loss']
        # for x, tag in zip(list(mloss[:-1]) + list(results), tags):
        #     tb_writer.add_scalar(tag, x, epoch)
        result_value = list(mloss[:-1]) + list(results)
        tb_writer.add_scalars('giou_loss', {'train': result_value[0], 'val': result_value[7]}, epoch)
        tb_writer.add_scalars('obj_loss', {'train': result_value[1], 'val': result_value[8]}, epoch)
        tb_writer.add_scalars('cls_loss', {'train': result_value[2], 'val': result_value[9]}, epoch)
        tb_writer.add_scalars('metrics_PR', {'precision': result_value[3], 'recall': result_value[4]}, epoch)
        tb_writer.add_scalars('metrics_AF', {'mAP_0.5': result_value[5], 'F1': result_value[6]}, epoch)
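The index mapping in that change can be isolated into a small helper that is easy to test without a writer. The sketch below assumes, as the commented-out tags suggest, that mloss holds (giou, obj, cls, total) and results holds (P, R, mAP@0.5, F1, val giou, val obj, val cls); `group_scalars` is a hypothetical name.

```python
def group_scalars(mloss, results):
    """Group train/val values so each chart overlays related curves."""
    values = list(mloss[:-1]) + list(results)  # drop the total loss
    return {
        "giou_loss": {"train": values[0], "val": values[7]},
        "obj_loss": {"train": values[1], "val": values[8]},
        "cls_loss": {"train": values[2], "val": values[9]},
        "metrics_PR": {"precision": values[3], "recall": values[4]},
        "metrics_AF": {"mAP_0.5": values[5], "F1": values[6]},
    }


# Intended use inside the training loop (tb_writer and epoch come from train.py):
# for main_tag, scalars in group_scalars(mloss, results).items():
#     tb_writer.add_scalars(main_tag, scalars, epoch)
```

Keeping the mapping in one place makes it harder to mix up indices if the order of results changes.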

Validation:

python3 test.py --cfg cfg/visdrone.cfg --data data/visdrone.data --weights weights/best.pt --img-size 800

    Class             Images   Targets   P      R      mAP@0.5  F1
    all               548      3.88e+04  0.236  0.379  0.262    0.288
    pedestrian        548      8.84e+03  0.17   0.253  0.152    0.203
    people            548      5.12e+03  0.107  0.151  0.0496   0.125
    bicycle           548      1.29e+03  0.125  0.19   0.0895   0.151
    car               548      1.41e+04  0.458  0.644  0.578    0.535
    van               548      1.98e+03  0.196  0.539  0.376    0.287
    truck             548      750       0.259  0.489  0.342    0.338
    tricycle          548      1.04e+03  0.284  0.366  0.234    0.32
    awning-tricycle   548      532       0.166  0.28   0.108    0.208
    bus               548      251       0.397  0.554  0.504    0.463
    motor             548      4.89e+03  0.198  0.325  0.187    0.246

Speed: 20.0/148.7/168.7 ms inference/NMS/total per 800x800 image at batch-size 16
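As a sanity check on how to read this output, the "all" row for mAP@0.5 and F1 appears to be the unweighted mean of the ten per-class rows, so rare classes count as much as car. The snippet below recomputes both from the validation numbers reported above.

```python
# (mAP@0.5, F1) per class, copied from the validation results above
per_class = {
    "pedestrian": (0.152, 0.203),
    "people": (0.0496, 0.125),
    "bicycle": (0.0895, 0.151),
    "car": (0.578, 0.535),
    "van": (0.376, 0.287),
    "truck": (0.342, 0.338),
    "tricycle": (0.234, 0.32),
    "awning-tricycle": (0.108, 0.208),
    "bus": (0.504, 0.463),
    "motor": (0.187, 0.246),
}

mean_map = sum(v[0] for v in per_class.values()) / len(per_class)
mean_f1 = sum(v[1] for v in per_class.values()) / len(per_class)

print(f"{mean_map:.3f}")  # 0.262, matches the 'all' row
print(f"{mean_f1:.3f}")   # 0.288, matches the 'all' row
```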

To evaluate on the test set, change the valid path in visdrone.data to the location of VisDrone2019-DET-test-dev:

    Class             Images    Targets   P       R       mAP@0.5  F1
    all               1.61e+03  7.51e+04  0.224   0.333   0.231    0.264
    pedestrian        1.61e+03  2.1e+04   0.121   0.141   0.0814   0.13
    people            1.61e+03  6.38e+03  0.0615  0.0593  0.0141   0.0604
    bicycle           1.61e+03  1.3e+03   0.141   0.144   0.0709   0.143
    car               1.61e+03  2.81e+04  0.38    0.581   0.486    0.46
    van               1.61e+03  5.77e+03  0.213   0.463   0.309    0.292
    truck             1.61e+03  2.66e+03  0.26    0.549   0.411    0.353
    tricycle          1.61e+03  530       0.134   0.275   0.0989   0.18
    awning-tricycle   1.61e+03  599       0.236   0.289   0.15     0.259
    bus               1.61e+03  2.94e+03  0.536   0.611   0.58     0.571
    motor             1.61e+03  5.84e+03  0.161   0.222   0.112    0.187

Speed: 20.2/178.3/198.5 ms inference/NMS/total per 800x800 image at batch-size 16

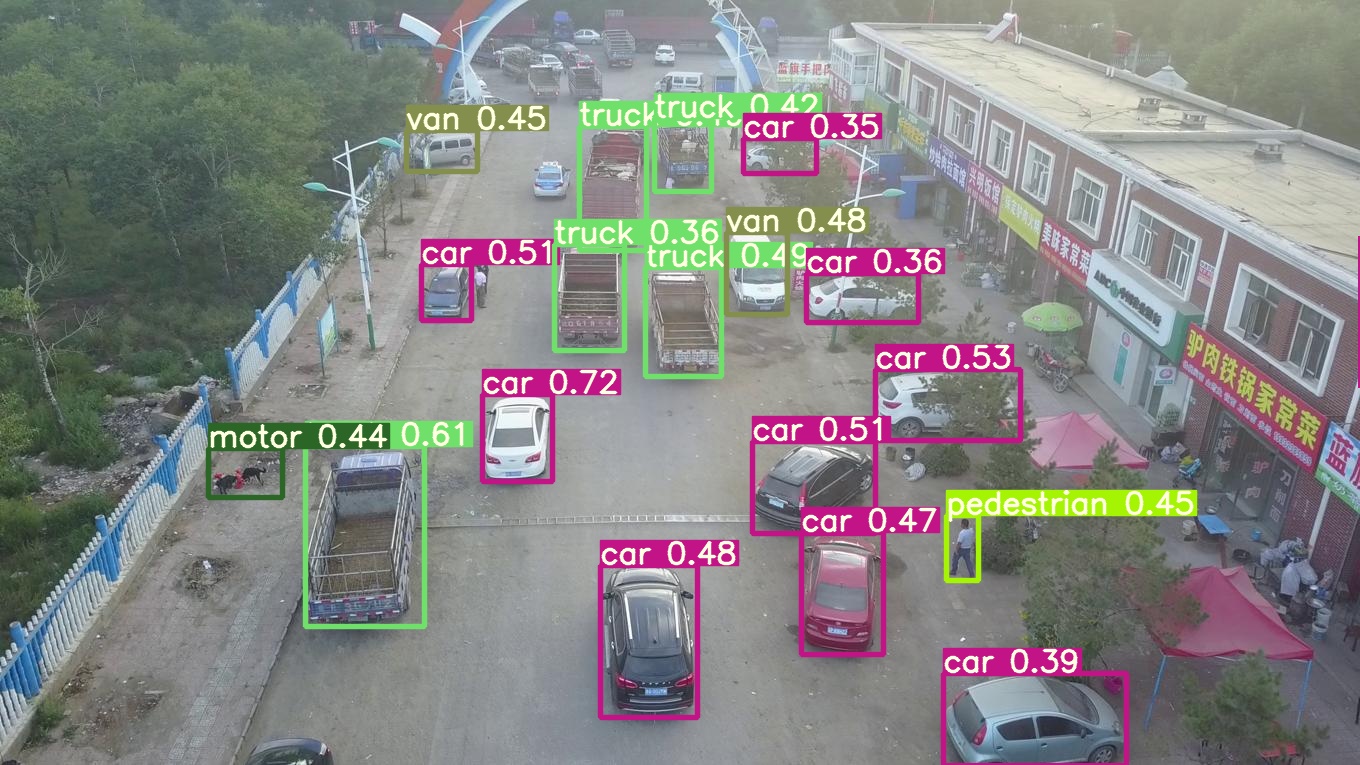

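The results show that performance on small objects is poor; I will try R-CNN when time permits.

Final configuration and weights download, extraction code: 74s4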

Source: https://www.cnblogs.com/chenzhengxi/p/13033124.html