normalization, standardization and regularization

作者:互联网

how do you normalize your ged matrix.

from 机器学习里的黑色艺术:normalization, standardization, regularization;

第一部分:大的层面上讲

1. normalization和standardization是差不多的,都是把数据进行前处理,从而使数值都落入到统一的数值范围,从而在建模过程中,各个特征量没差别对待。normalization一般是把数据限定在需要的范围,比如一般都是【0,1】,从而消除了数据量纲对建模的影响。standardization 一般是指将数据正态化,使平均值0方差为1. 因此normalization和standardization 是针对数据而言的,消除一些数值差异带来的特种重要性偏见。经过归一化的数据,能加快训练速度,促进算法的收敛。

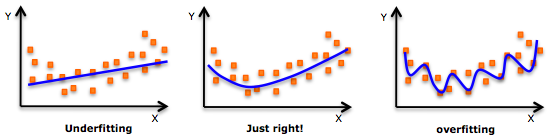

2.而regularization是在cost function里面加惩罚项,增加建模的模糊性,从而把捕捉到的趋势从局部细微趋势,调整到整体大概趋势。虽然一定程度上的放宽了建模要求,但是能有效防止over-fitting的问题(如图,来源于网上),增加模型准确性。因此,regularization是针对模型而言。

这三个term说的是不同的事情。

第二部分:方法

总结下normalization, standardization,和regularization的方法。

Normalization 和 Standardization

(1).最大最小值normalization: x'=(x-min)/(max-min). 这种方法的本质还是线性变换,简单直接。缺点就是新数据的加入,可能会因数值范围的扩大需要重新regularization。

(2). 对数归一化:x'=log10(x)/log10(xmax)或者log10(x)。推荐第一种,除以最大值,这样使数据落到【0,1】区间

(3).反正切归一化。x'=2atan(x)/pi。能把数据投影到【-1,1】区间。

(4).zero mean normalization归一化,也是standardization. x'=(x-mean)/std.

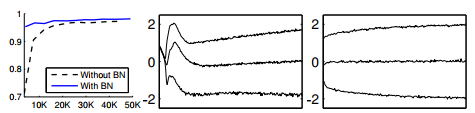

有无normalization,模型的学习曲线是不一样的,甚至会收敛结果不一样。比如在深度学习中,batch normalization有无,学习曲线对比是这样的:图一 蓝色线有batch normalization (BN),黑色虚线是没有BN. 黑色线放大,是图二的样子,蓝色线放大是图三的样子。reference:Batch Normalization: Accelerating Deep Network Training by Reducing

Internal Covariate Shift, Sergey Ioffe.

Regularization 方法

一般形式,应该是 min , R是regularization term。一般方法有

- L1 regularization: 对整个绝对值只和进行惩罚。

- L2 regularization:对系数平方和进行惩罚。

- Elastic-net 混合regularization。

from Differences between normalization, standardization and regularization;

Normalization

Normalization usually rescales features to [0,1][0,1].1 That is,

x′=x−min(x)max(x)−min(x)x′=x−min(x)max(x)−min(x)

It will be useful when we are sure enough that there are no anomalies (i.e. outliers) with extremely large or small values. For example, in a recommender system, the ratings made by users are limited to a small finite set like {1,2,3,4,5}{1,2,3,4,5}.

In some situations, we may prefer to map data to a range like [−1,1][−1,1] with zero-mean.2 Then we should choose mean normalization.3

x′=x−mean(x)max(x)−min(x)x′=x−mean(x)max(x)−min(x)

In this way, it will be more convenient for us to use other techniques like matrix factorization.

Standardization

Standardization is widely used as a preprocessing step in many learning algorithms to rescale the features to zero-mean and unit-variance.3

x′=x−μσx′=x−μσ

Regularization

Different from the feature scaling techniques mentioned above, regularization is intended to solve the overfitting problem. By adding an extra part增加惩罚项 to the loss function, the parameters in learning algorithms are more likely to converge to smaller values, which can significantly reduce overfitting.

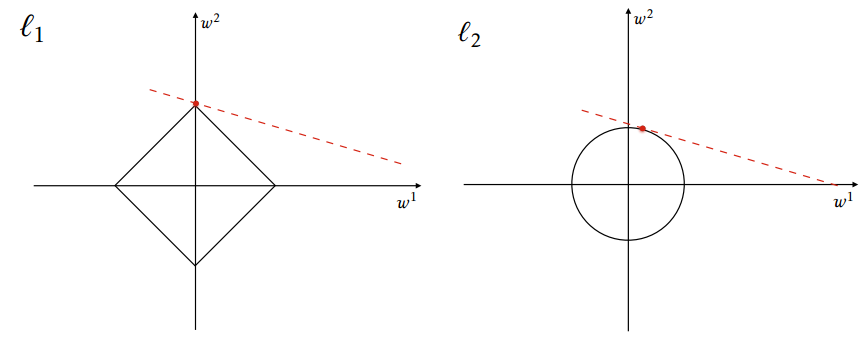

There are mainly two basic types of regularization: L1-norm (lasso) and L2-norm (ridge regression).4

L1-norm5

The original loss function is denoted by f(x)f(x), and the new one is F(x)F(x).

F(x)=f(x)+λ∥x∥1F(x)=f(x)+λ‖x‖1

where

∥x∥p=p ⎷n∑i=1|xi|p‖x‖p=∑i=1n|xi|pp

L1 regularization is better when we want to train a sparse model, since the absolute value function is not differentiable at 0.

L2-norm56

F(x)=f(x)+λ∥x∥22F(x)=f(x)+λ‖x‖22

L2 regularization is preferred in ill-posed problems for smoothing.

Here is a comparison between L1 and L2 regularizations.

From https://en.wikipedia.org/wiki/Regularization_(mathematics)

References

-

https://stats.stackexchange.com/a/10298 ↩

-

https://www.quora.com/What-is-the-difference-between-normalization-standardization-and-regularization-for-data/answer/Enzo-Tagliazucchi?share=c48b6752&srid=51VPj ↩

-

https://en.wikipedia.org/wiki/Regularization_%28mathematics%29 ↩

-

https://www.quora.com/What-is-the-difference-between-L1-and-L2-regularization-How-does-it-solve-the-problem-of-overfitting-Which-regularizer-to-use-and-when/answer/Kenneth-Tran?share=400c336d&srid=51VPj ↩ ↩2

-

https://en.wikipedia.org/wiki/Ridge_regression ↩

标签:regularization,standardization,min,https,normalization,mean 来源: https://www.cnblogs.com/dulun/p/11760301.html