Deploying and Configuring a ClickHouse Cluster from RPM Packages

Author: Internet

Tags (space-separated): ClickHouse series

[toc]

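1. Introduction to ClickHouse

1.1 Introduction to the ClickHouse database

ClickHouse is a column-oriented database with an MPP (massively parallel processing) architecture that the Russian company Yandex open-sourced in 2016. It is used mainly for big-data analytics (OLAP) and offers fast queries, linear scalability, a rich feature set, efficient hardware utilization, fault tolerance, and high reliability.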

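Typical ClickHouse use cases:

Storing and aggregating data in the telecom industry

Recording and analyzing user-behavior data

Analyzing information-security logs

Business intelligence and value mining of advertising-network data

Processing and analyzing data from online games and the Internet of Things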

ClickHouse benchmarks against other database systems:

https://clickhouse.tech/benchmark/dbms/

2. Deploying ClickHouse on a single node

2.1 Preparing the system environment

OS: CentOS 7.9 x64

A non-root user with sudo privileges, for example: clickhouse

cat /etc/hosts

----

192.168.100.11 node01.flyfish.cn

192.168.100.12 node02.flyfish.cn

192.168.100.13 node03.flyfish.cn

192.168.100.14 node04.flyfish.cn

192.168.100.15 node05.flyfish.cn

192.168.100.16 node06.flyfish.cn

192.168.100.17 node07.flyfish.cn

192.168.100.18 node08.flyfish.cn

----

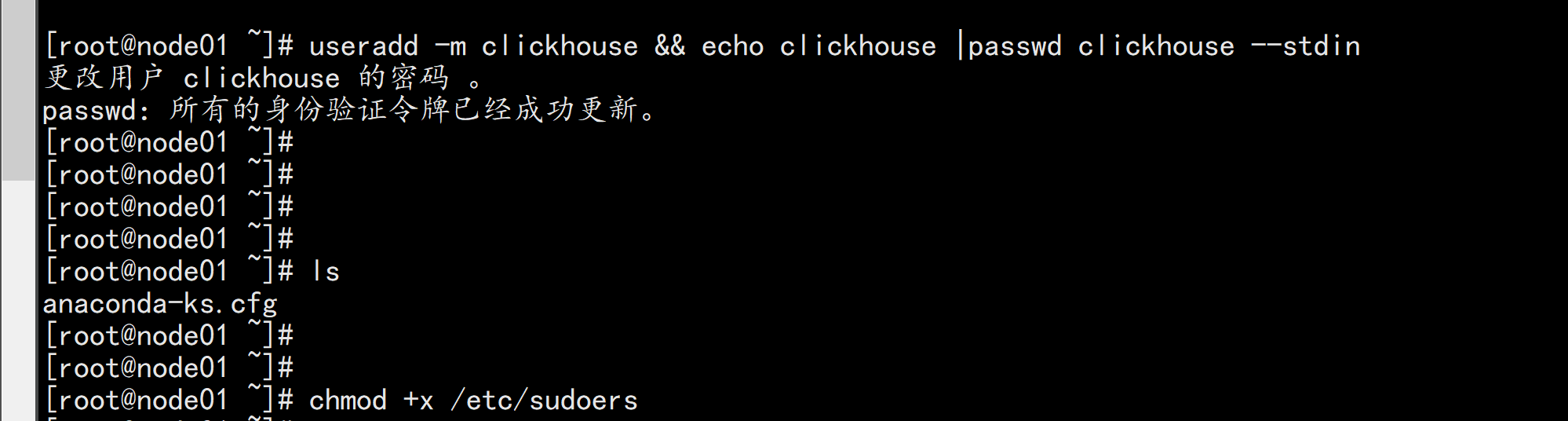

The single-node installation uses the first host; the cluster installation uses the first four hosts.

useradd -m clickhouse && echo clickhouse | passwd clickhouse --stdin

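Grant the clickhouse user sudo privileges. Use visudo rather than changing the permissions of /etc/sudoers; it edits the file safely and validates the syntax before saving:

visudo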

---

clickhouse ALL=(ALL) NOPASSWD:ALL

----

su - clickhouse

sudo su
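As an alternative to editing /etc/sudoers itself, the same rule can live in a drop-in file. The sketch below is only an illustration: the function name write_sudoers_dropin and the file name /etc/sudoers.d/clickhouse are assumptions, and it relies on the default CentOS 7 sudoers including the /etc/sudoers.d directory.

```shell
# Sketch: write a single sudoers rule for one user into a drop-in file.
# write_sudoers_dropin is a hypothetical helper, not part of sudo.
write_sudoers_dropin() {
  local user="$1" dest="$2"
  # Same rule as in the original post, parameterized by user name.
  printf '%s ALL=(ALL) NOPASSWD:ALL\n' "$user" > "$dest"
  # sudo refuses drop-in files that are writable by group or others.
  chmod 0440 "$dest"
}

# Intended use, as root (the path is an assumption):
#   write_sudoers_dropin clickhouse /etc/sudoers.d/clickhouse
#   visudo -cf /etc/sudoers.d/clickhouse   # syntax check only
```

Running visudo -cf on the new file before logging out helps avoid locking yourself out with a malformed rule.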

2.2 Single-node installation

sudo yum install yum-utils

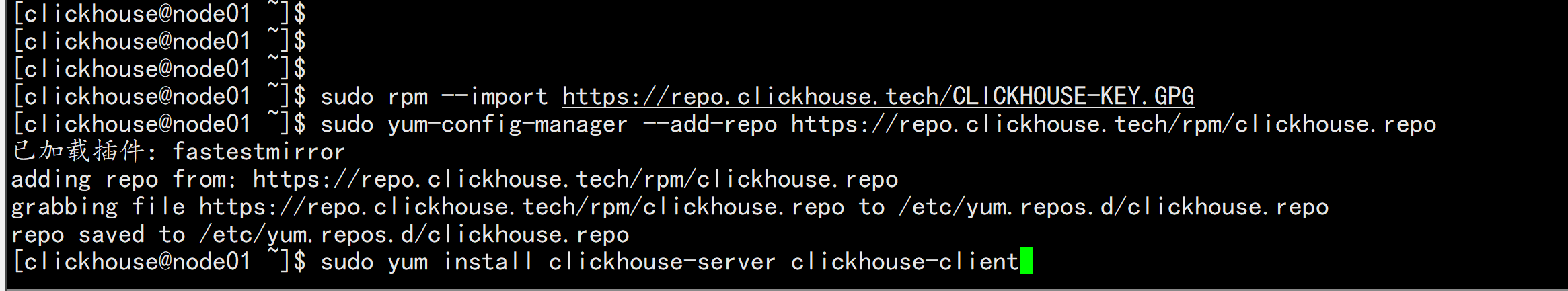

sudo rpm --import https://repo.clickhouse.tech/CLICKHOUSE-KEY.GPG

sudo yum-config-manager --add-repo https://repo.clickhouse.tech/rpm/clickhouse.repo

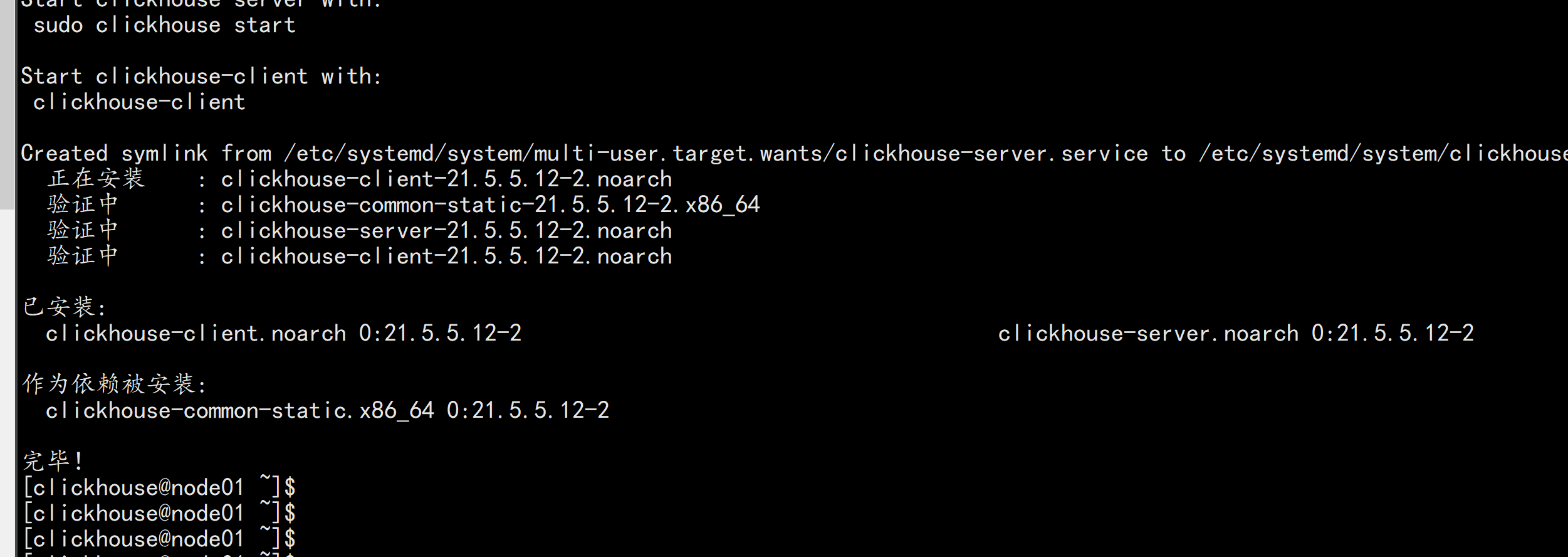

sudo yum install clickhouse-server clickhouse-client

Server configuration files: /etc/clickhouse-server/

Data directory: /var/lib/clickhouse/

Log directory: /var/log/clickhouse-server/

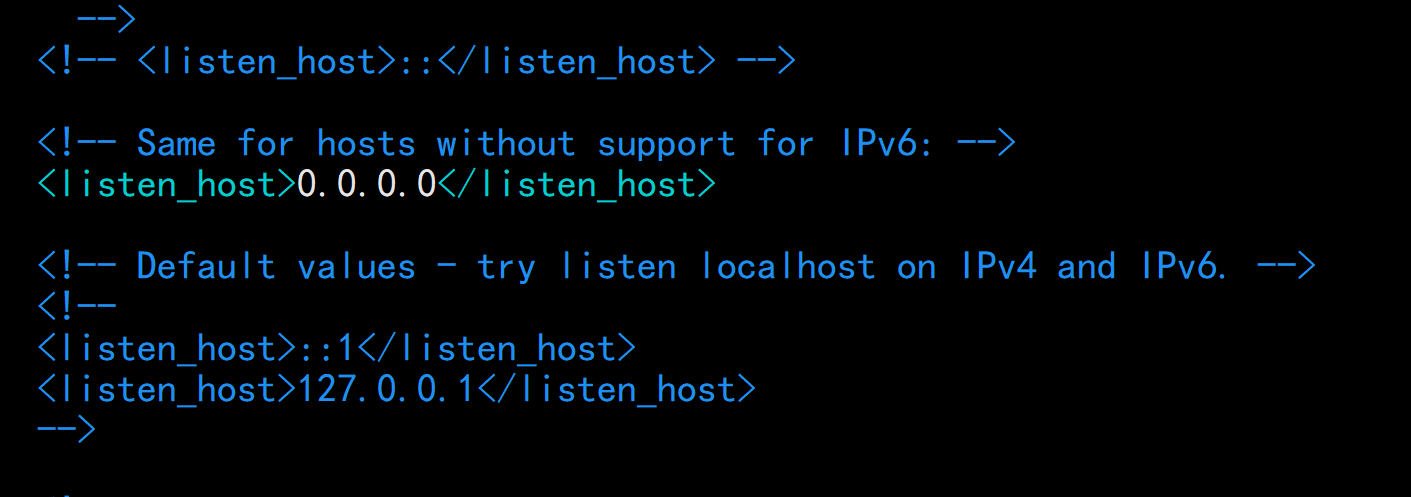

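The ClickHouse server and client are now installed. Next, configure the address that ClickHouse listens on:

vim +159 /etc/clickhouse-server/config.xml

----

Line 159 (the line number may differ between versions):

<listen_host>0.0.0.0</listen_host>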

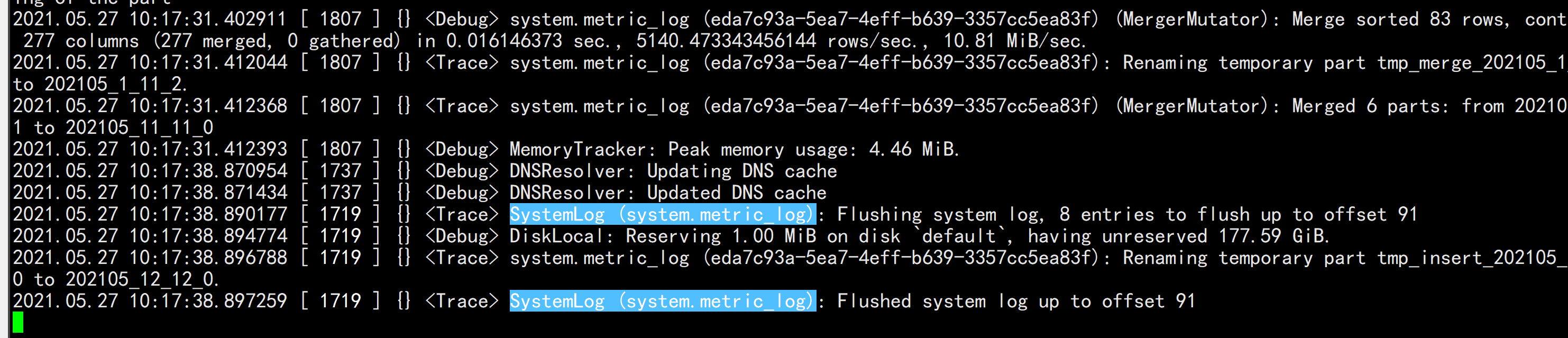

----

Start ClickHouse (in the foreground):

sudo -u clickhouse clickhouse-server --config-file=/etc/clickhouse-server/config.xml

Start it as a service:

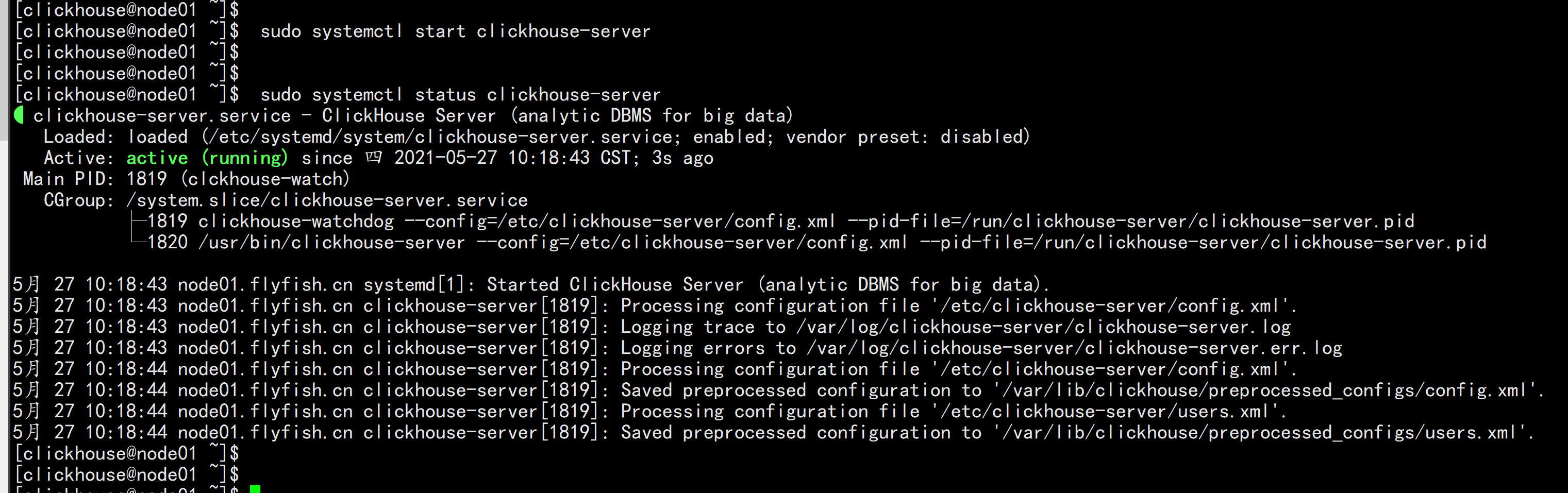

sudo systemctl start clickhouse-server

sudo systemctl stop clickhouse-server

sudo systemctl status clickhouse-server

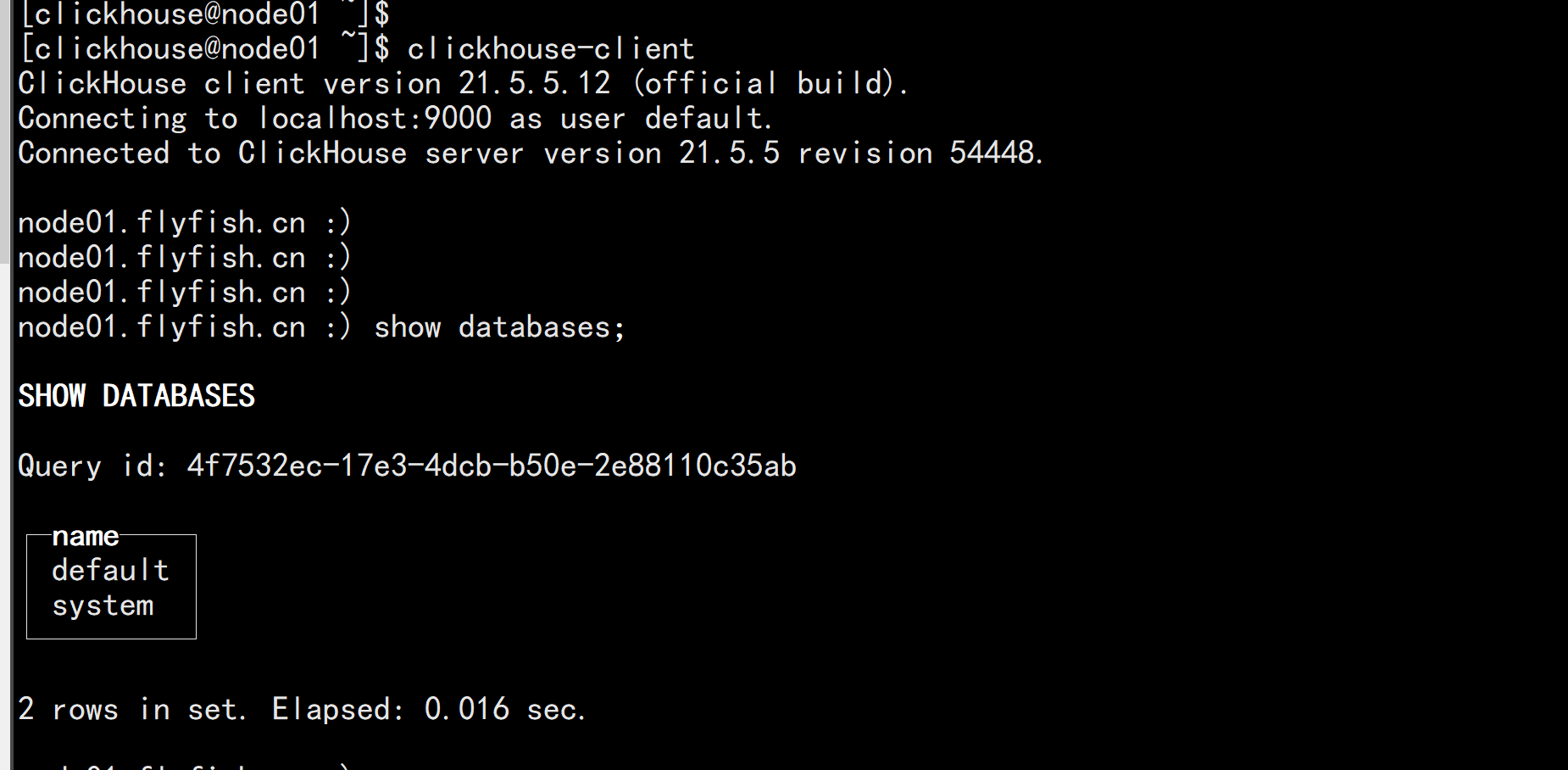

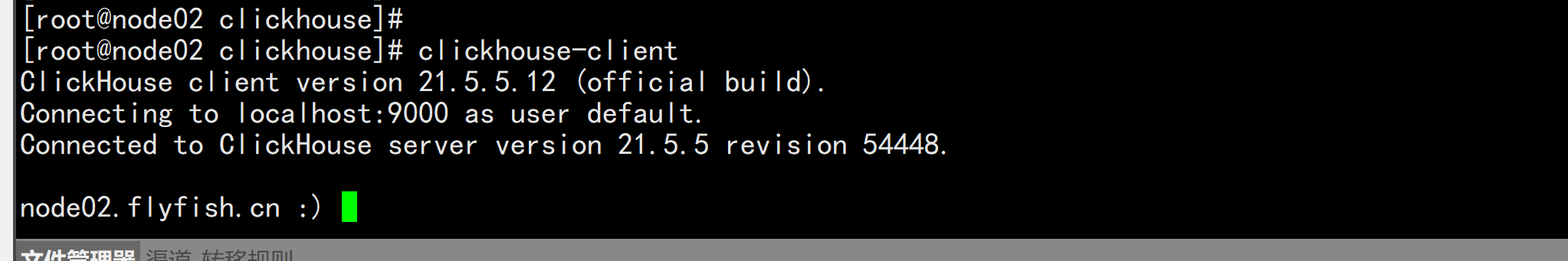

clickhouse-client
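Instead of editing config.xml in place for the listen address, the setting can also go into an override file, because ClickHouse merges the XML files under /etc/clickhouse-server/config.d/ into the main configuration. The following is a minimal sketch under stated assumptions: the helper name write_listen_override and the file name listen.xml are made up for illustration, and the root tag is <yandex> to match the configuration files used in this post.

```shell
# Sketch: write a config.d override that sets listen_host.
# write_listen_override and listen.xml are hypothetical names.
write_listen_override() {
  local conf_dir="$1"
  mkdir -p "$conf_dir"
  # Quoted heredoc: the XML is written verbatim, without shell expansion.
  cat > "$conf_dir/listen.xml" <<'EOF'
<yandex>
    <listen_host>0.0.0.0</listen_host>
</yandex>
EOF
}

# Intended use, as root, followed by a restart:
#   write_listen_override /etc/clickhouse-server/config.d
#   systemctl restart clickhouse-server
```

Keeping local changes in a separate file makes them easier to carry over when a package upgrade replaces config.xml.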

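2.3 Deploying a ClickHouse cluster

2.3.1 Deploying the ZooKeeper cluster: JDK setup

Deploy ZooKeeper on node02.flyfish.cn, node03.flyfish.cn, and node04.flyfish.cn. ZooKeeper runs on the JVM, so install the JDK on these nodes first.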

mkdir -p /opt/bigdata/

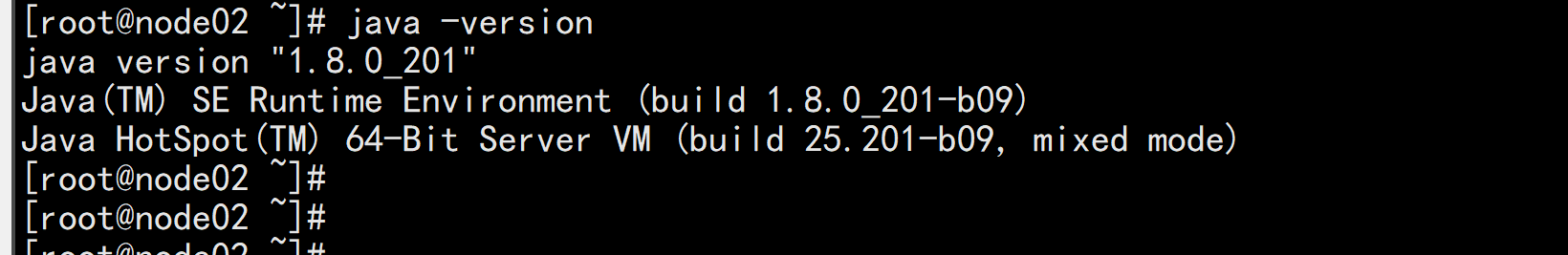

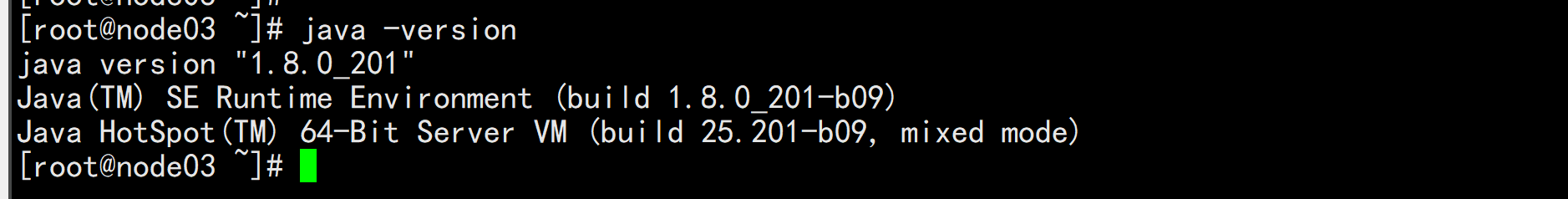

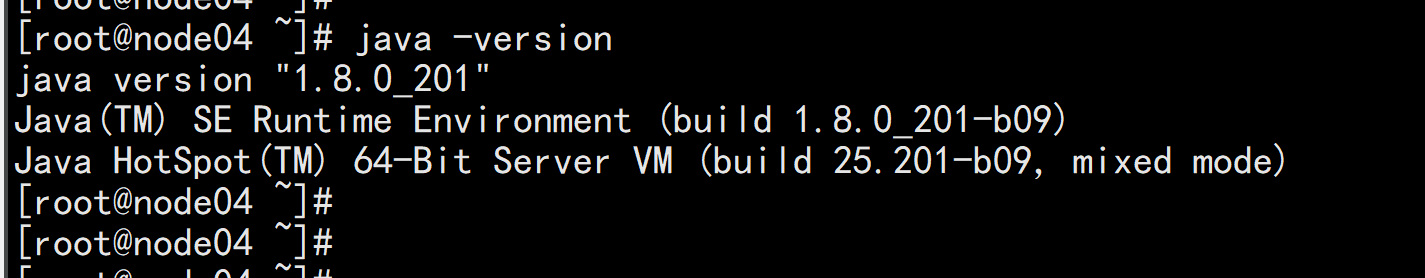

tar -zxvf jdk1.8.0_201.tar.gz

mv jdk1.8.0_201 /opt/bigdata/jdk

vim /etc/profile

---

### jdk

export JAVA_HOME=/opt/bigdata/jdk

export CLASSPATH=.:$JAVA_HOME/jre/lib:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

PATH=$PATH:$HOME/bin:$JAVA_HOME/bin

---

source /etc/profile

java -version

2.3.2 Installing ZooKeeper

tar -zxvf zookeeper-3.4.14.tar.gz

mv zookeeper-3.4.14 /opt/bigdata/zookeeper

mkdir -p /opt/bigdata/zookeeper/data

mkdir -p /opt/bigdata/zookeeper/log

cd /opt/bigdata/zookeeper/data/

echo 1 > myid

----

cd /opt/bigdata/zookeeper/conf

cp zoo_sample.cfg zoo.cfg

vim zoo.cfg

----

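# Heartbeat interval, in milliseconds

tickTime=2000

# Time allowed for a follower to connect to and sync with the leader at startup, as a multiple of tickTime

initLimit=10

# Maximum time for a request/response exchange between the leader and a follower, as a multiple of tickTime. A follower that cannot communicate with the leader within this time is dropped.

syncLimit=5

# Client connection port

clientPort=2181

# Data directory for this node; create it in advance and add the myid file, which identifies the server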

dataDir=/opt/bigdata/zookeeper/data

dataLogDir=/opt/bigdata/zookeeper/log

server.1=192.168.100.12:2888:3888

server.2=192.168.100.13:2888:3888

server.3=192.168.100.14:2888:3888

---

-----

scp -r /opt/bigdata/zookeeper root@192.168.100.13:/opt/bigdata/

scp -r /opt/bigdata/zookeeper root@192.168.100.14:/opt/bigdata/

Set myid on node 192.168.100.13:

cd /opt/bigdata/zookeeper/data/

echo 2 > myid

Set myid on node 192.168.100.14:

cd /opt/bigdata/zookeeper/data/

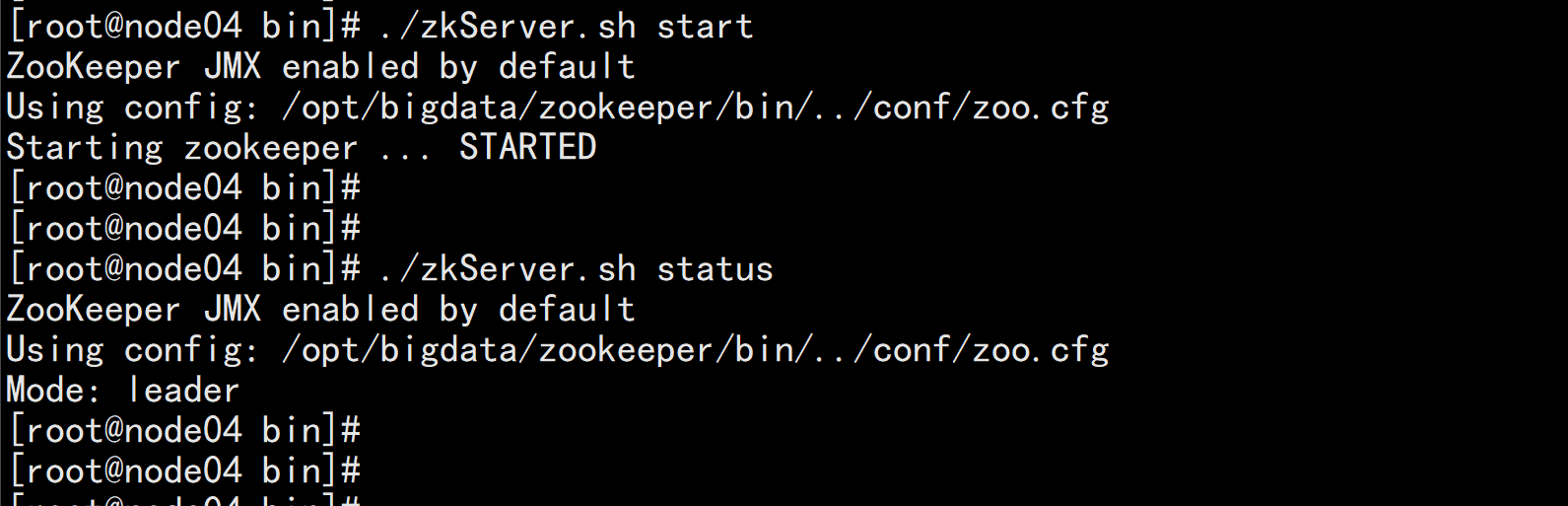

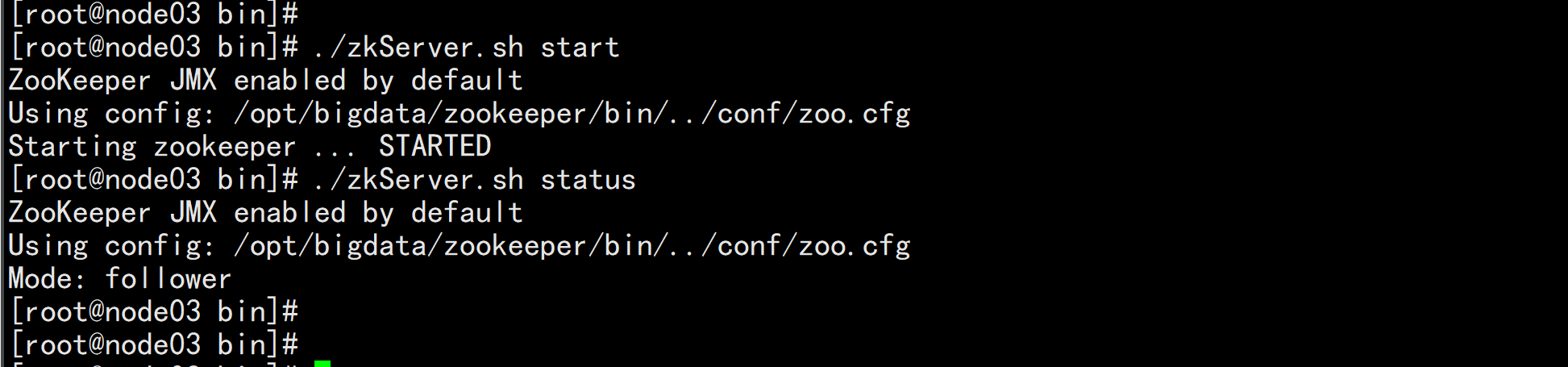

echo 3 > myid

Start ZooKeeper on each of the three nodes:

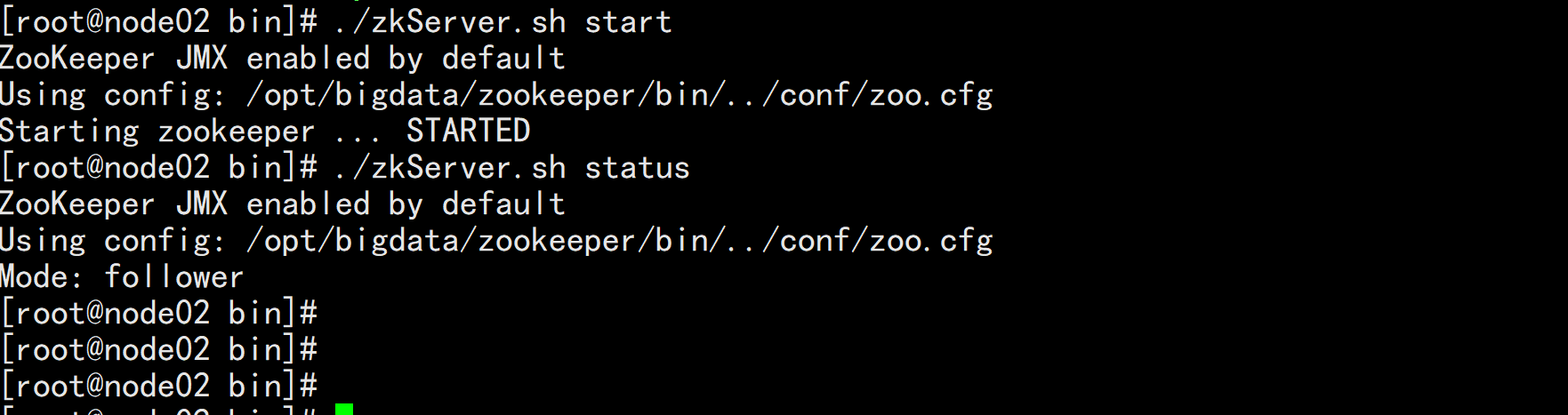

cd /opt/bigdata/zookeeper/bin/

./zkServer.sh start
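The myid value on each node has to match the N of that node's server.N line in zoo.cfg. Rather than typing it by hand, it can be derived from the config file. This is a small sketch, not part of ZooKeeper: myid_for_ip is a hypothetical helper name, and it assumes the server.N=ip:port:port layout shown above.

```shell
# Sketch: print the N of the "server.N=<ip>:..." line that matches an IP.
# myid_for_ip is a hypothetical helper, not a ZooKeeper tool.
myid_for_ip() {
  local cfg="$1" ip="$2"
  # Split on "=" and ":" so that $1 is "server.N" and $2 is the address.
  awk -F'[=:]' -v ip="$ip" '
    $1 ~ /^server\.[0-9]+$/ && $2 == ip {
      sub(/^server\./, "", $1)
      print $1
    }' "$cfg"
}

# Intended use on node 192.168.100.13:
#   myid_for_ip /opt/bigdata/zookeeper/conf/zoo.cfg 192.168.100.13 \
#     > /opt/bigdata/zookeeper/data/myid
```

Once all three nodes are started, running ./zkServer.sh status on each of them should report one node in leader mode and the other two in follower mode.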

2.3.3 Deploying the ClickHouse cluster

Install ClickHouse on every node, following the single-node steps above.

clickhouse-client

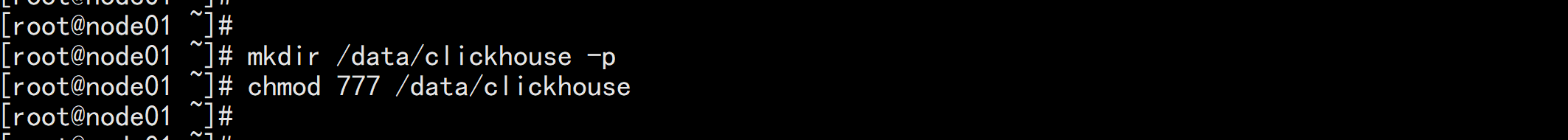

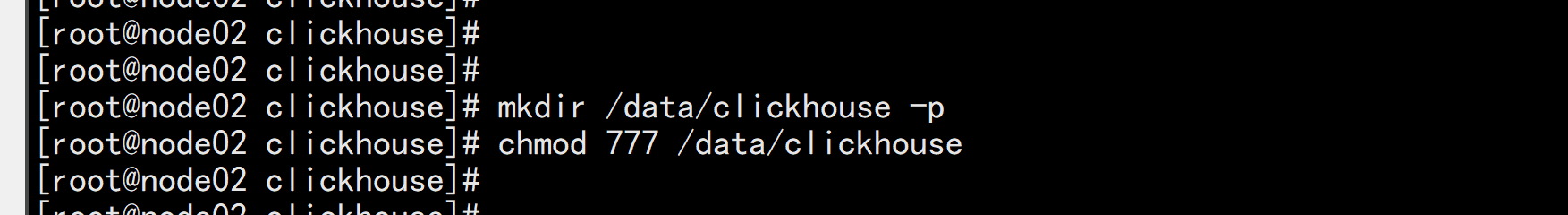

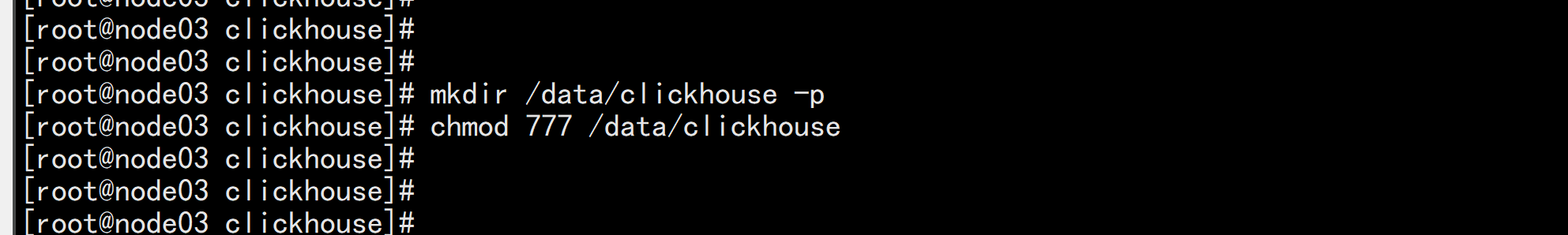

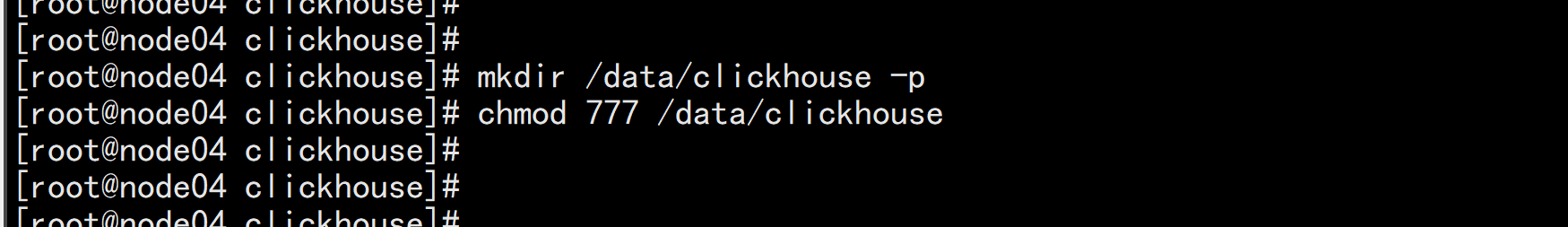

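Create the ClickHouse data directory (on every node) and hand it to the clickhouse user instead of making it world-writable:

mkdir -p /data/clickhouse

chown -R clickhouse:clickhouse /data/clickhouse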

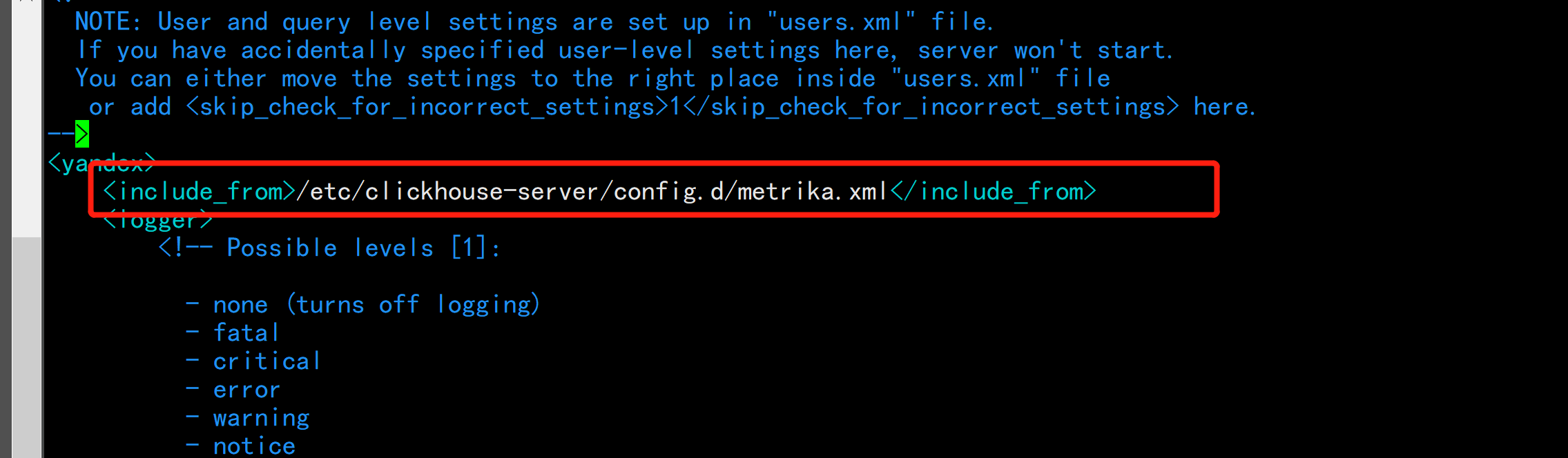

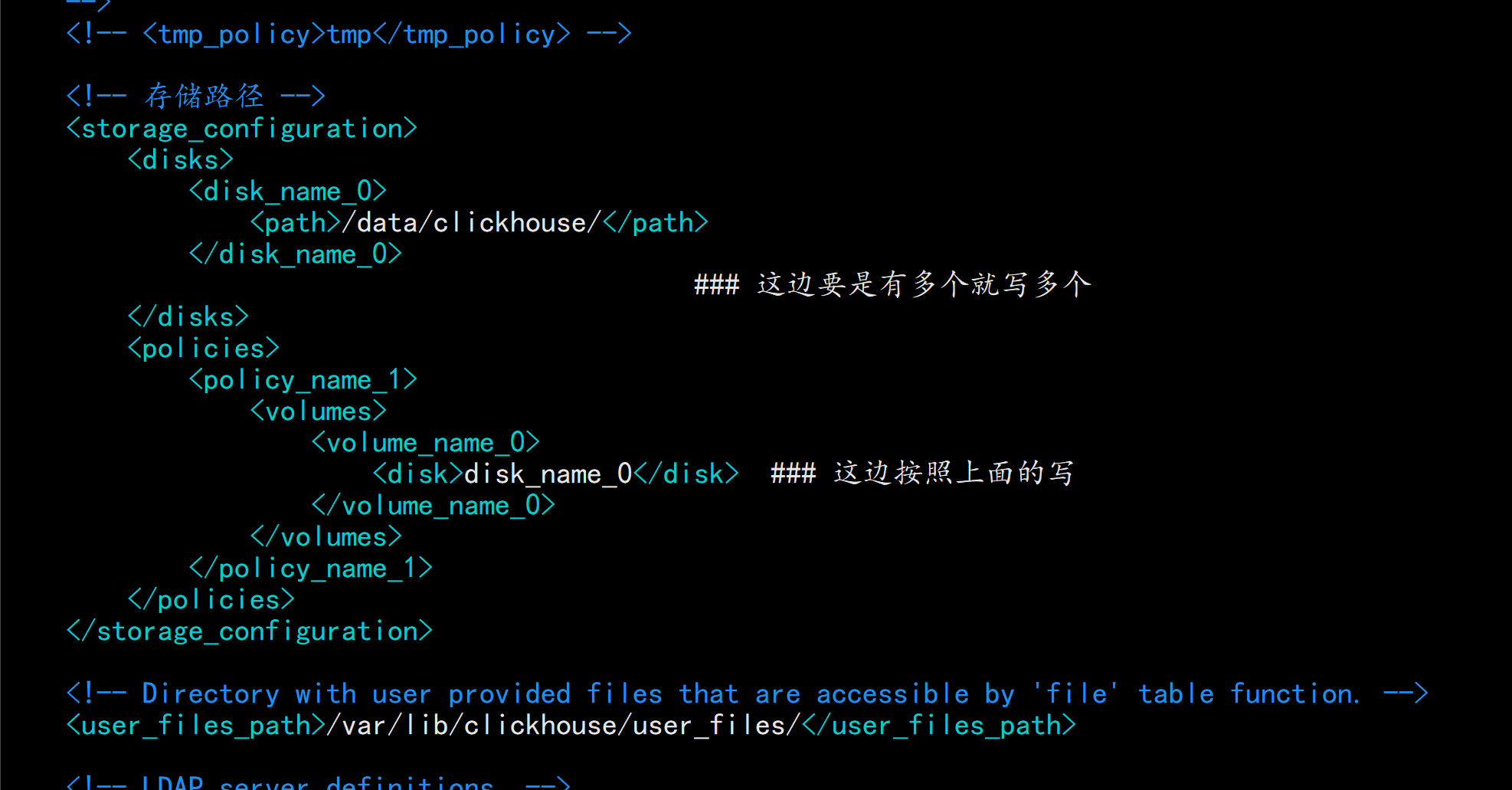

vim /etc/clickhouse-server/config.xml

---

<yandex>

<!-- Include metrika.xml -->

<include_from>/etc/clickhouse-server/config.d/metrika.xml</include_from>

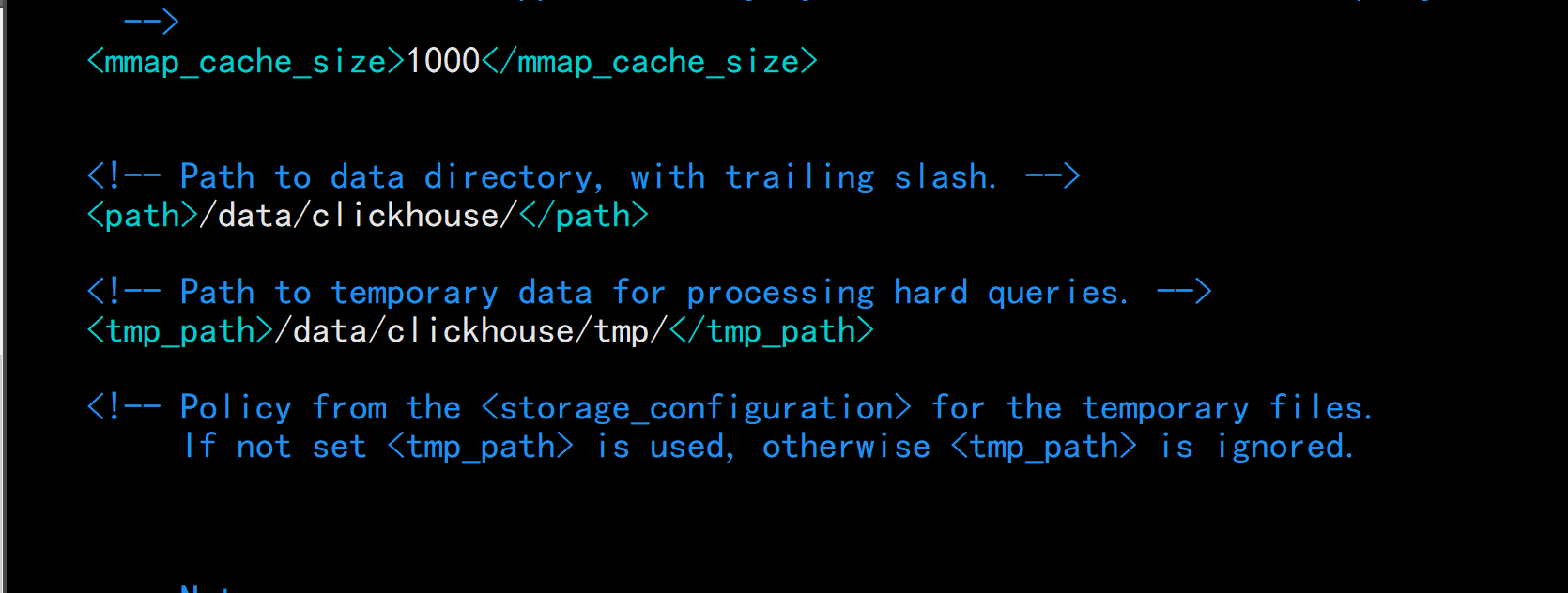

<!-- Path to data directory, with trailing slash. -->

<path>/data/clickhouse/</path>

<!-- Path to temporary data for processing hard queries. -->

<tmp_path>/data/clickhouse/tmp/</tmp_path>

<!-- <tmp_policy>tmp</tmp_policy> -->

<!-- Storage configuration -->

<storage_configuration>

<disks>

<disk_name_0>

<path>/data/clickhouse/</path>

</disk_name_0>

<!-- Add one entry here for each additional disk -->

</disks>

<policies>

<policy_name_1>

<volumes>

<volume_name_0>

<disk>disk_name_0</disk> <!-- Must match the disk name defined above -->

</volume_name_0>

</volumes>

</policy_name_1>

</policies>

</storage_configuration>

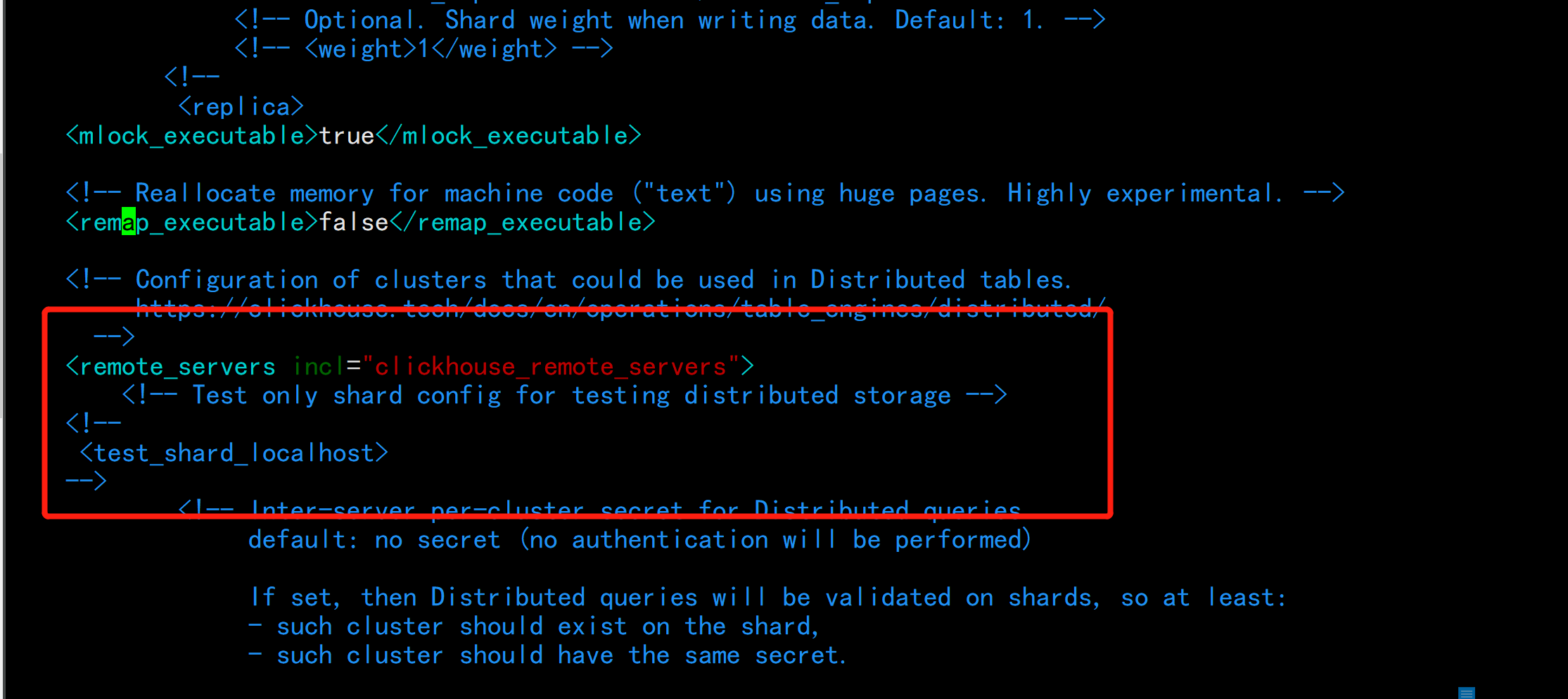

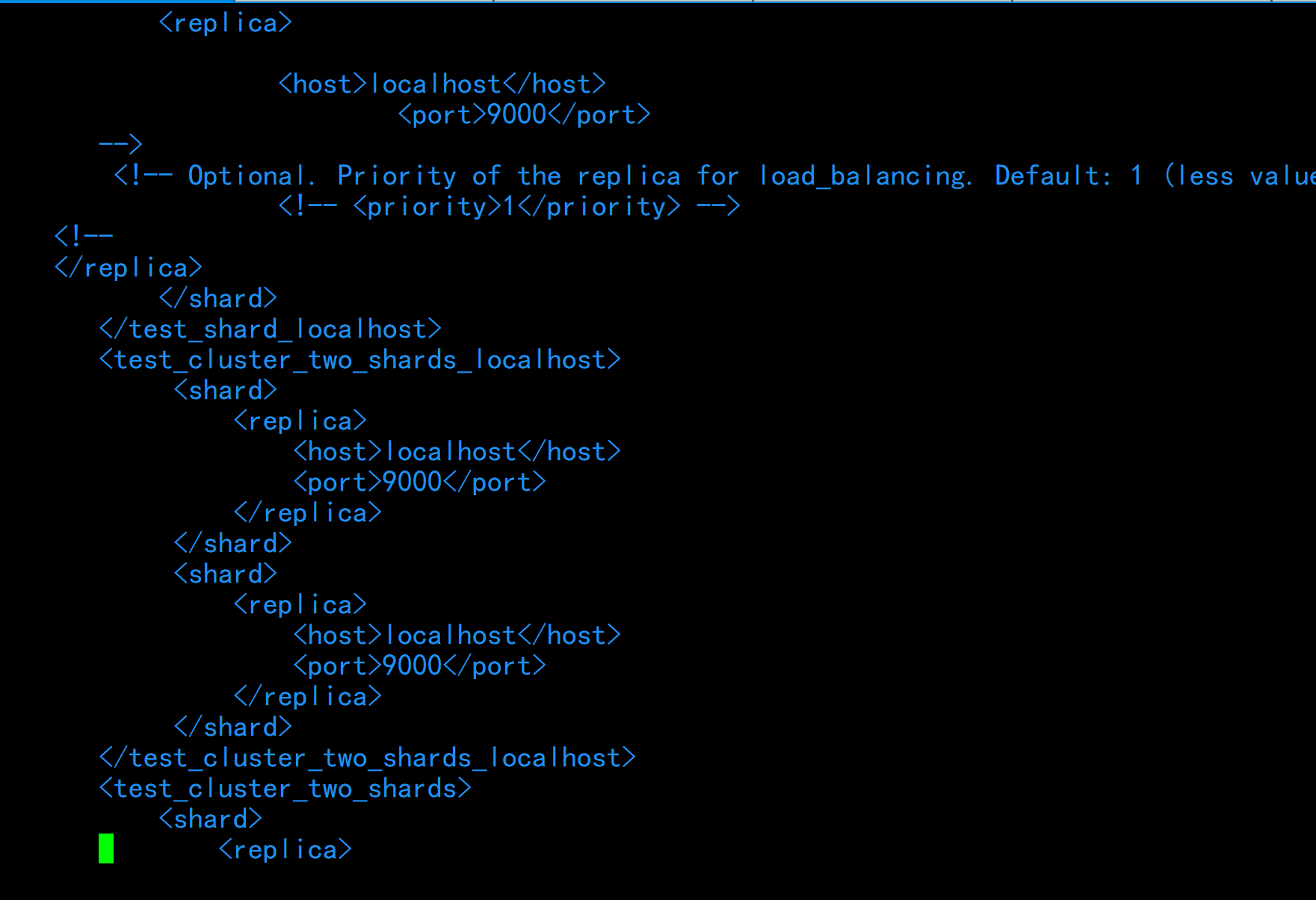

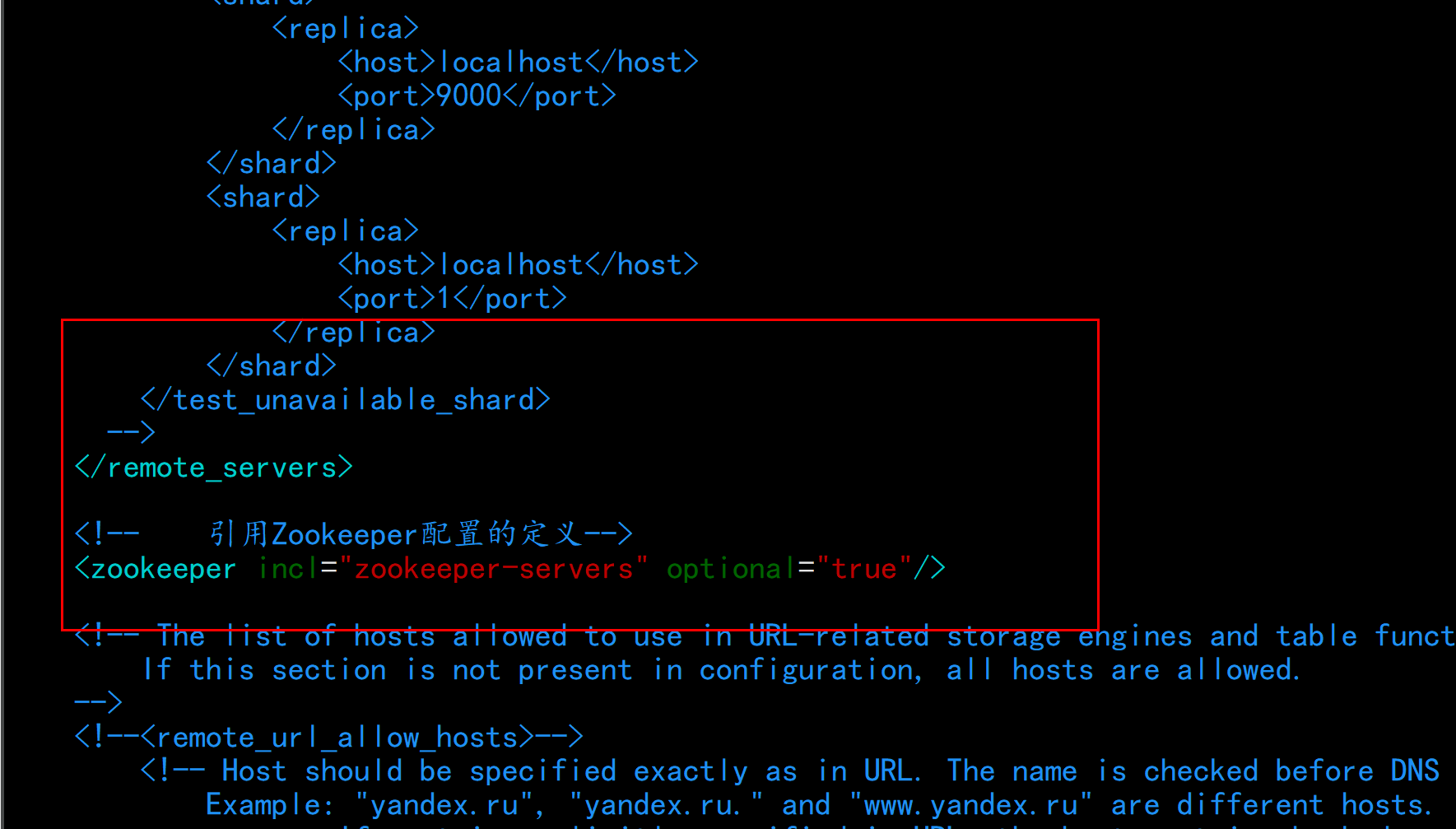

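Remove all the default localhost shard definitions so that they do not show up in the cluster list:

<remote_servers incl="clickhouse_remote_servers" >

...... <!-- comment out all of the default entries in between -->

</remote_servers>

<!-- Reference the ZooKeeper configuration -->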

<zookeeper incl="zookeeper-servers" optional="true"/>

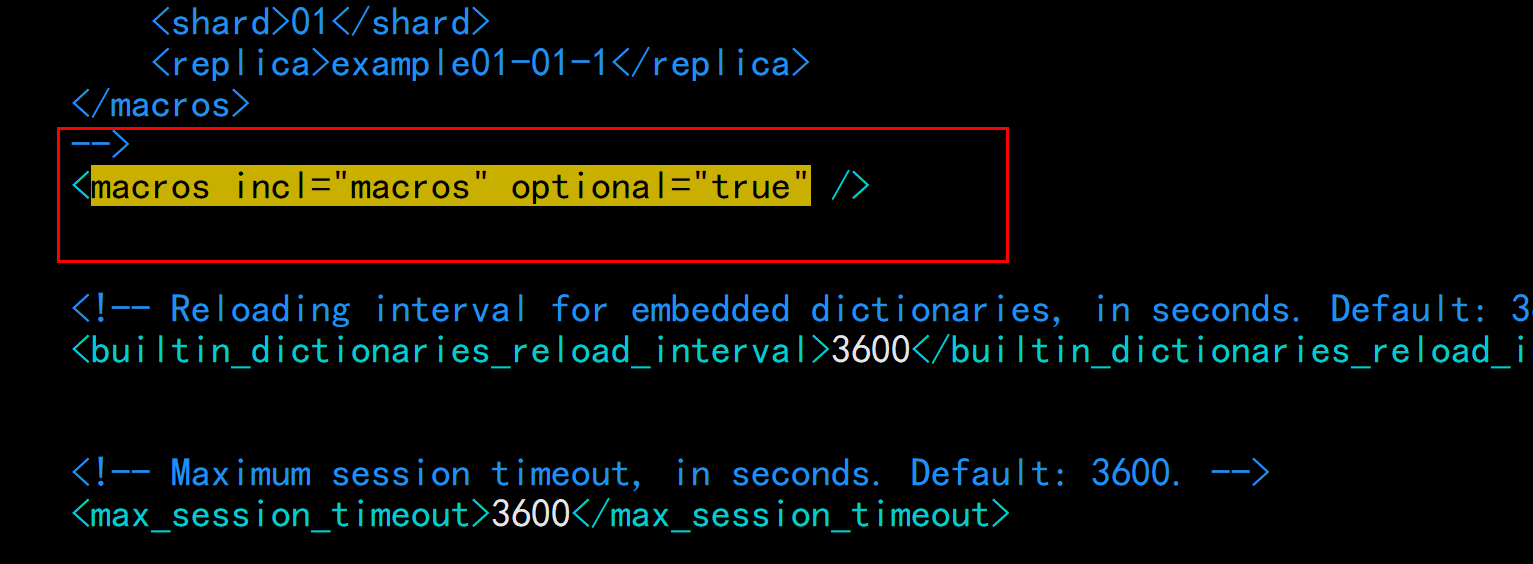

<macros incl="macros" optional="true" />

---

cd /etc/clickhouse-server/config.d/

vim metrika.xml

---

<yandex>

<clickhouse_remote_servers>

<!-- Cluster name; ClickHouse supports multiple clusters -->

<clickhouse_cluster>

<!-- Shard definitions: 4 shards here, each with a single replica (the node itself) -->

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>node01.flyfish.cn</host>

<port>9000</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>node02.flyfish.cn</host>

<port>9000</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>node03.flyfish.cn</host>

<port>9000</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>node04.flyfish.cn</host>

<port>9000</port>

</replica>

</shard>

</clickhouse_cluster>

</clickhouse_remote_servers>

<!-- ZooKeeper cluster connection details -->

<zookeeper-servers>

<node index="1">

<host>node02.flyfish.cn</host>

<port>2181</port>

</node>

<node index="2">

<host>node03.flyfish.cn</host>

<port>2181</port>

</node>

<node index="3">

<host>node04.flyfish.cn</host>

<port>2181</port>

</node>

</zookeeper-servers>

<!-- Macro definitions, used later -->

<macros>

<replica>node04.flyfish.cn</replica> <!-- Set this to the hostname of the host this file is on -->

</macros>

<!-- Allow access from any source IP address -->

<networks>

<ip>::/0</ip>

</networks>

<!-- Data compression method; the default is lz4 -->

<clickhouse_compression>

<case>

<min_part_size>10000000000</min_part_size>

<min_part_size_ratio>0.01</min_part_size_ratio>

<method>lz4</method>

</case>

</clickhouse_compression>

</yandex>
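The four shard entries in metrika.xml differ only in the host name, so they can be generated from a host list, which helps keep the position of internal_replication (a child of shard, not of replica) consistent. The helper below is an illustrative sketch; render_shards is a hypothetical name, and the port 9000 and internal_replication value are simply copied from the file above.

```shell
# Sketch: print one single-replica <shard> block per host name given.
# render_shards is a hypothetical helper, not a ClickHouse tool.
render_shards() {
  local host
  for host in "$@"; do
    cat <<EOF
<shard>
    <internal_replication>true</internal_replication>
    <replica>
        <host>${host}</host>
        <port>9000</port>
    </replica>
</shard>
EOF
  done
}

# Intended use: paste the output inside <clickhouse_cluster> ... </clickhouse_cluster>
#   render_shards node01.flyfish.cn node02.flyfish.cn node03.flyfish.cn node04.flyfish.cn
```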

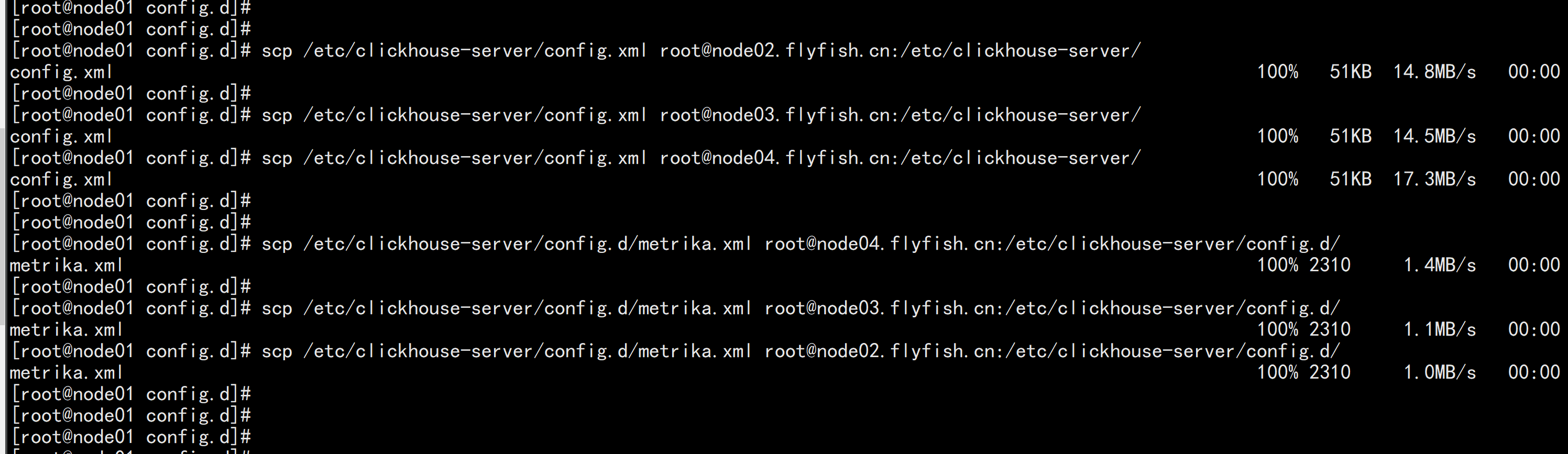

---

Sync the files to all hosts:

scp /etc/clickhouse-server/config.xml root@node02.flyfish.cn:/etc/clickhouse-server/

scp /etc/clickhouse-server/config.xml root@node03.flyfish.cn:/etc/clickhouse-server/

scp /etc/clickhouse-server/config.xml root@node04.flyfish.cn:/etc/clickhouse-server/

scp /etc/clickhouse-server/config.d/metrika.xml root@node02.flyfish.cn:/etc/clickhouse-server/config.d/

scp /etc/clickhouse-server/config.d/metrika.xml root@node03.flyfish.cn:/etc/clickhouse-server/config.d/

scp /etc/clickhouse-server/config.d/metrika.xml root@node04.flyfish.cn:/etc/clickhouse-server/config.d/

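On every host, update the macros block:

<!-- Macro definitions, used later -->

<macros>

<replica>node04.flyfish.cn</replica> <!-- Use the hostname of the current host; this example is node04.flyfish.cn, so adjust it on the other nodes -->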

</macros>
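Because the copied metrika.xml carries the same replica macro everywhere, each node needs its own value after the sync. One way to avoid hand-editing is a small sed rewrite restricted to the macros block. This is a sketch under assumptions: set_replica_macro is a hypothetical helper, and it expects the replica macro to sit on a single line as in the file above.

```shell
# Sketch: set the <replica> macro inside <macros>...</macros> to a host name.
# set_replica_macro is a hypothetical helper, not a ClickHouse tool.
set_replica_macro() {
  local file="$1" host="$2"
  # The address range limits the substitution to the macros block, so the
  # multi-line <replica> elements inside the shard definitions are untouched.
  sed "/<macros>/,/<\/macros>/ s|<replica>[^<]*</replica>|<replica>${host}</replica>|" \
    "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Intended use on each node, assuming hostname -f returns the FQDN:
#   set_replica_macro /etc/clickhouse-server/config.d/metrika.xml "$(hostname -f)"
```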

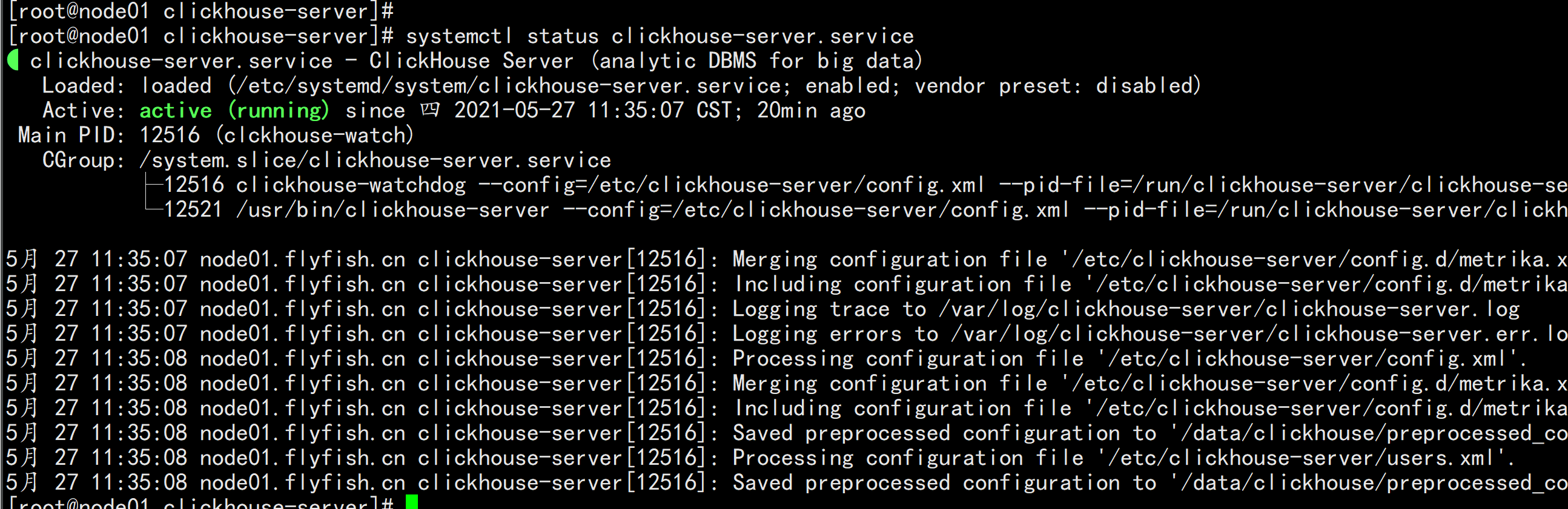

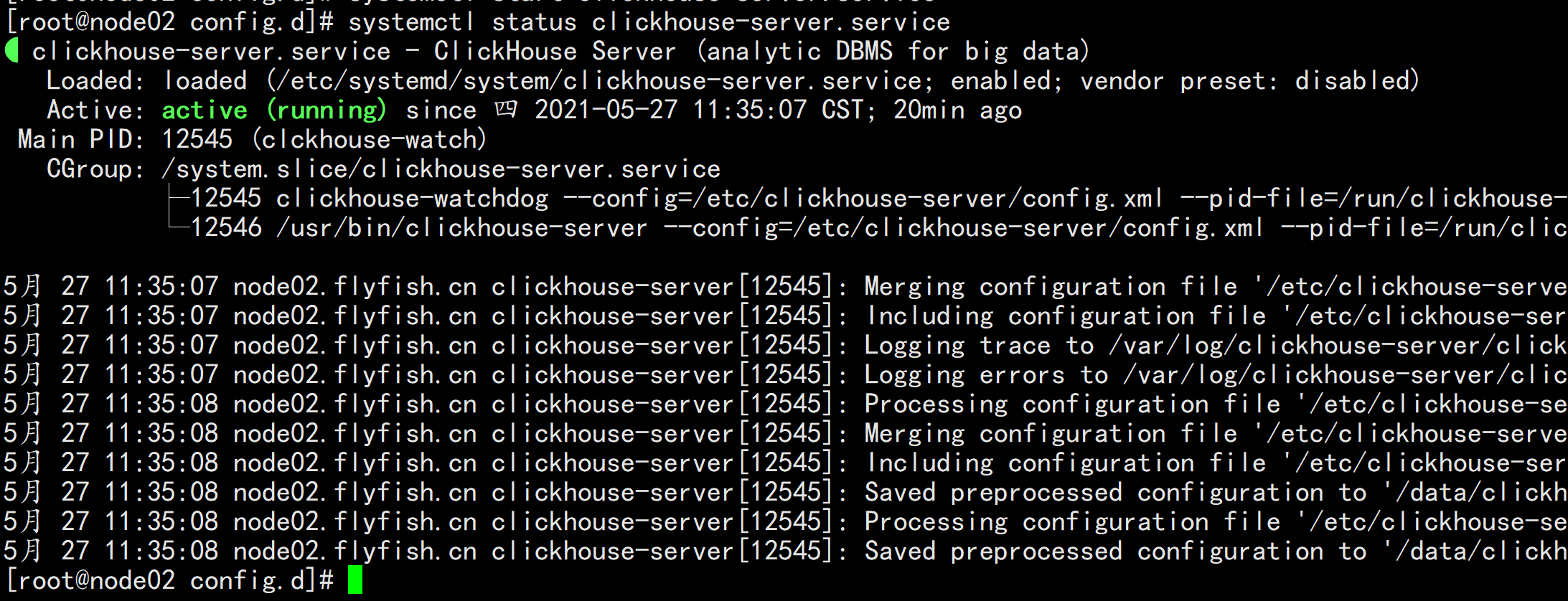

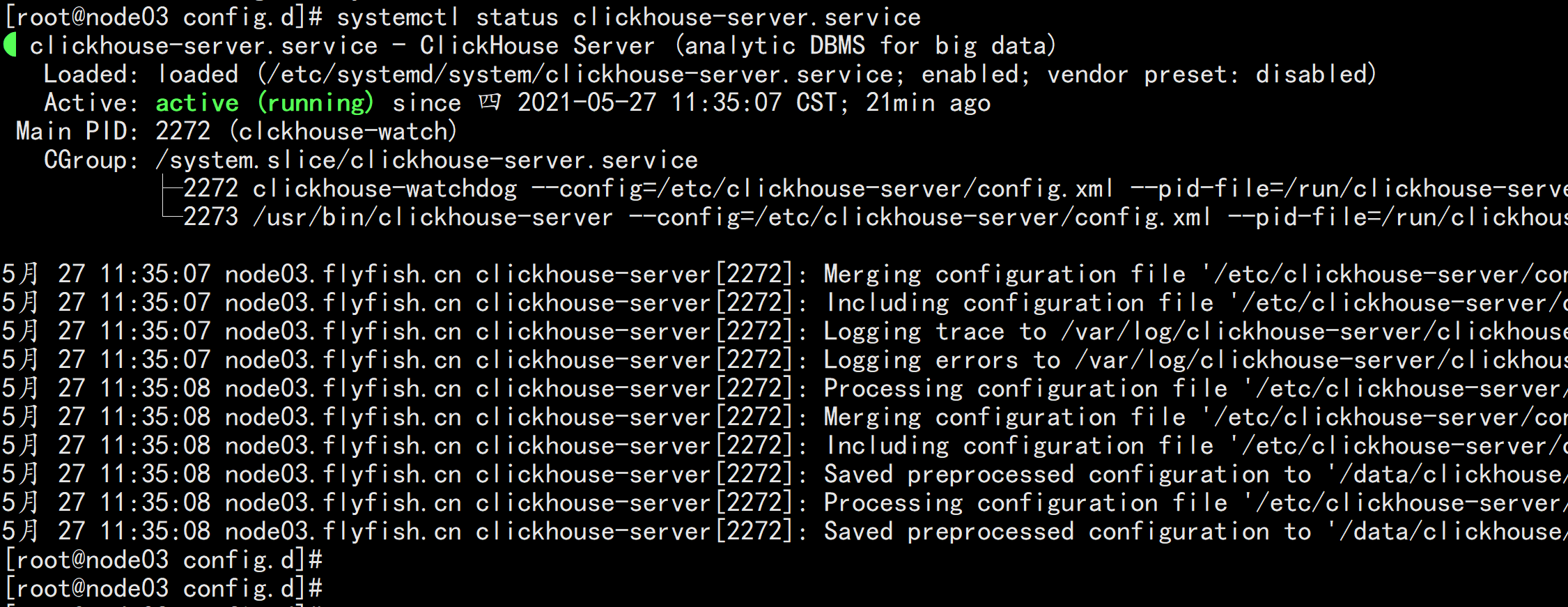

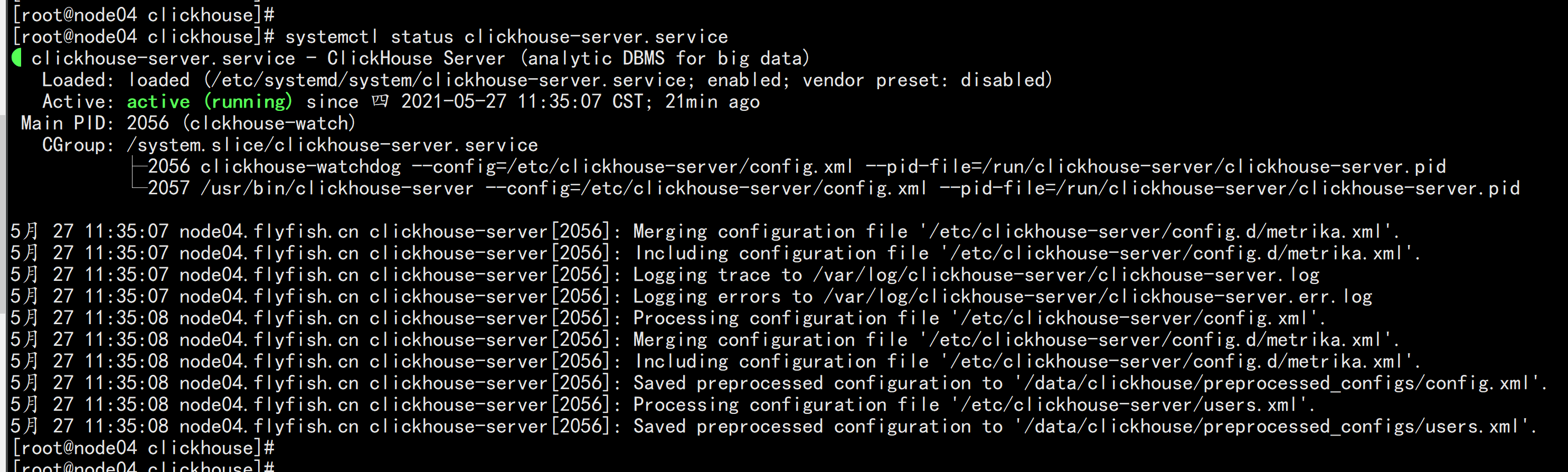

Starting and stopping:

systemctl stop clickhouse-server.service    # stop the server on every node

systemctl start clickhouse-server.service   # start the server on every node

systemctl status clickhouse-server.service  # check the server status on every node

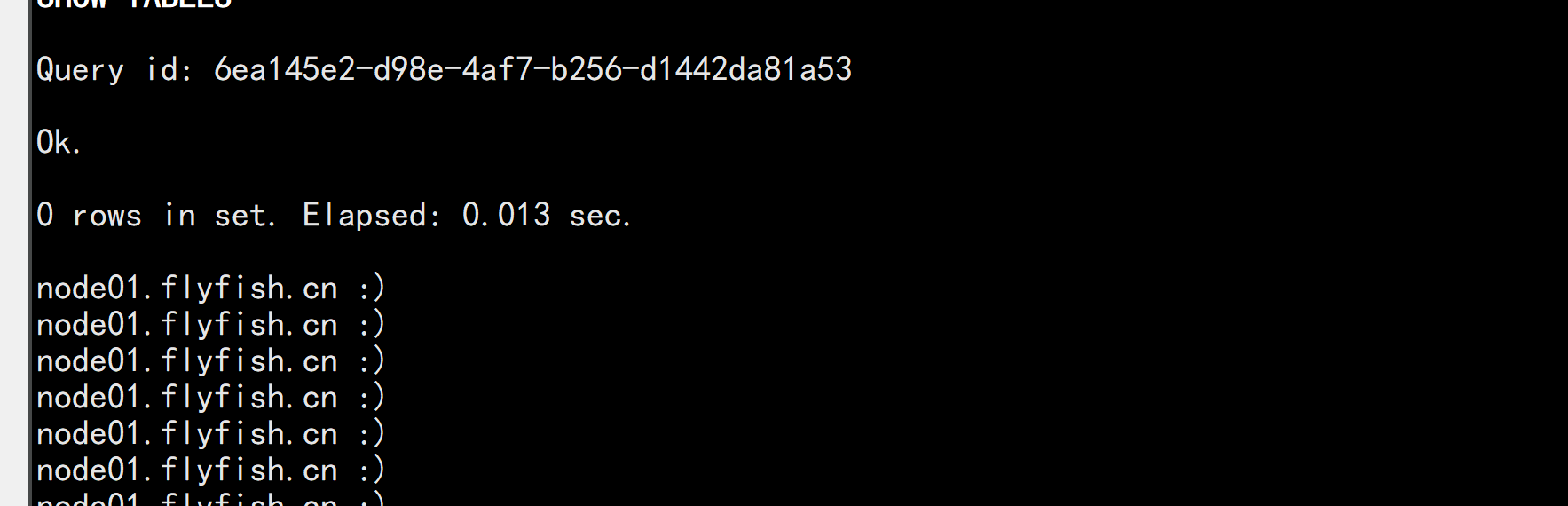

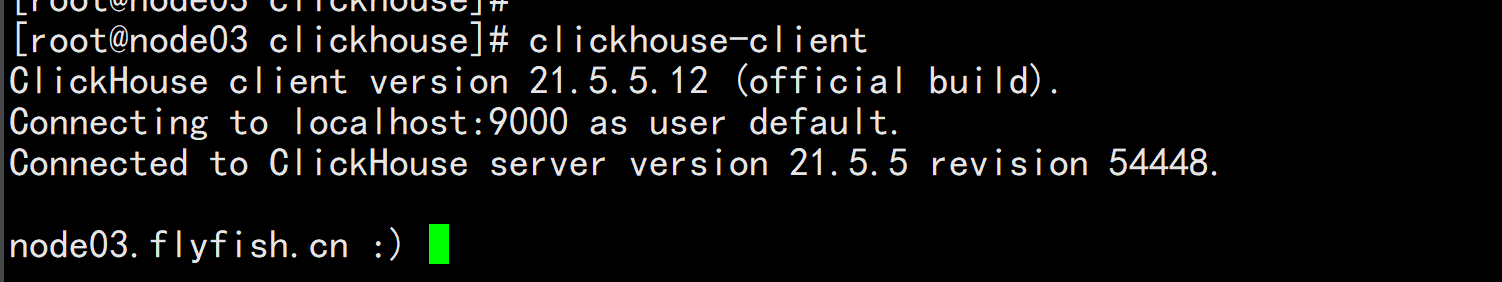

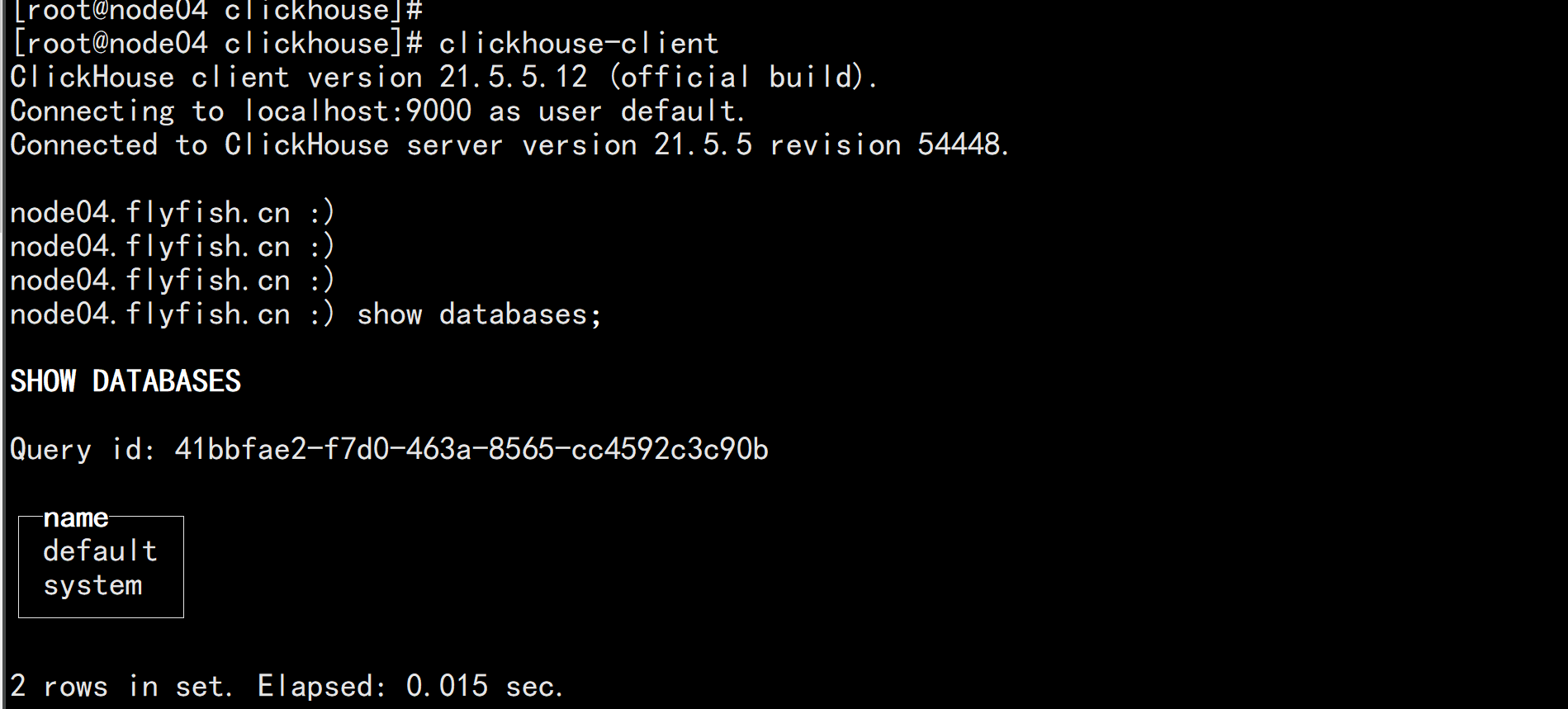

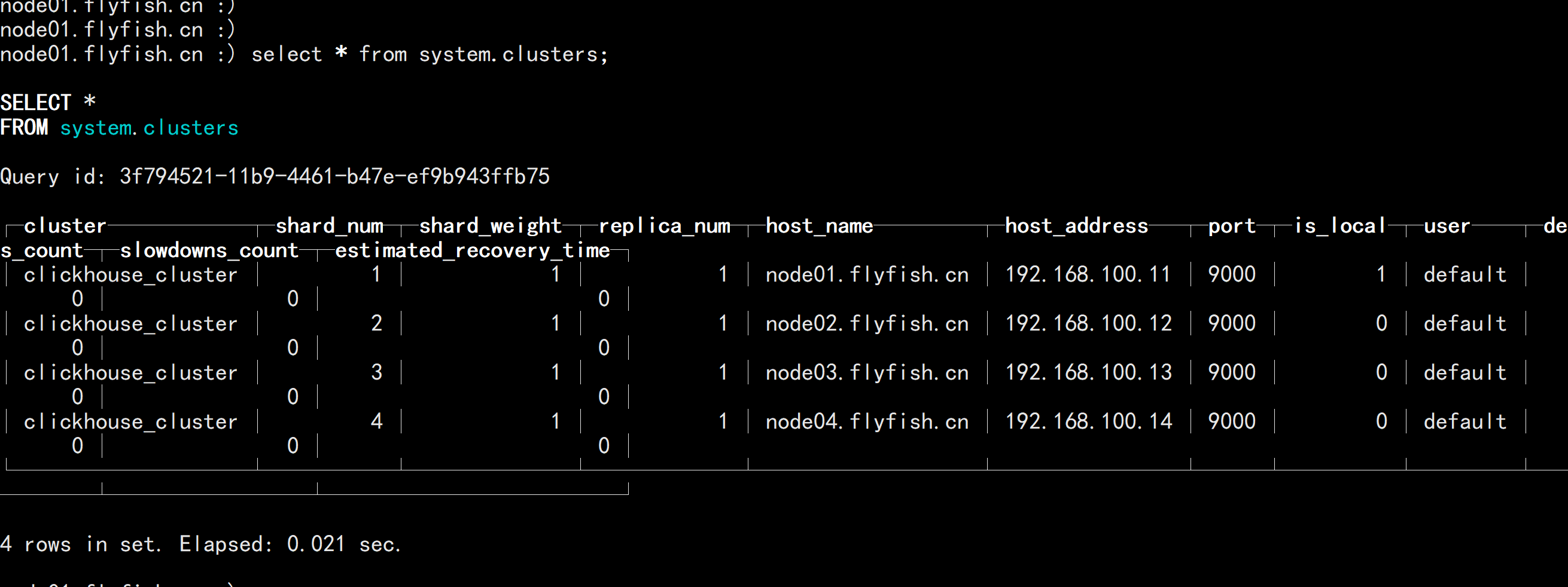

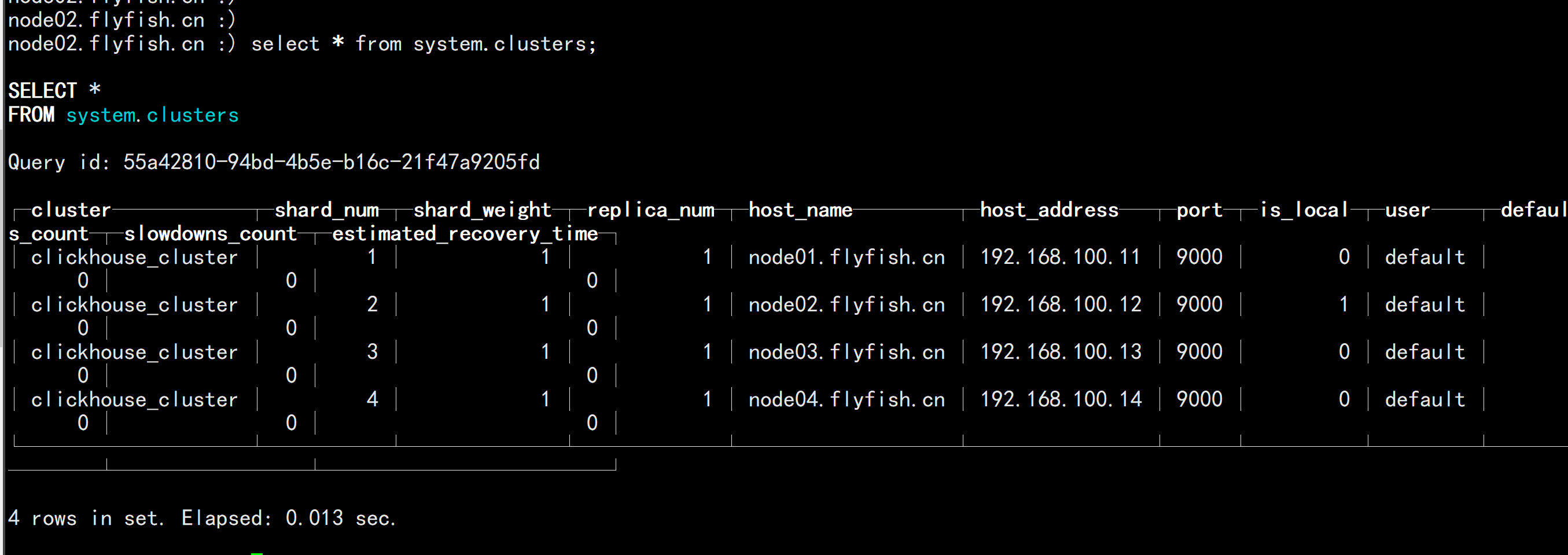

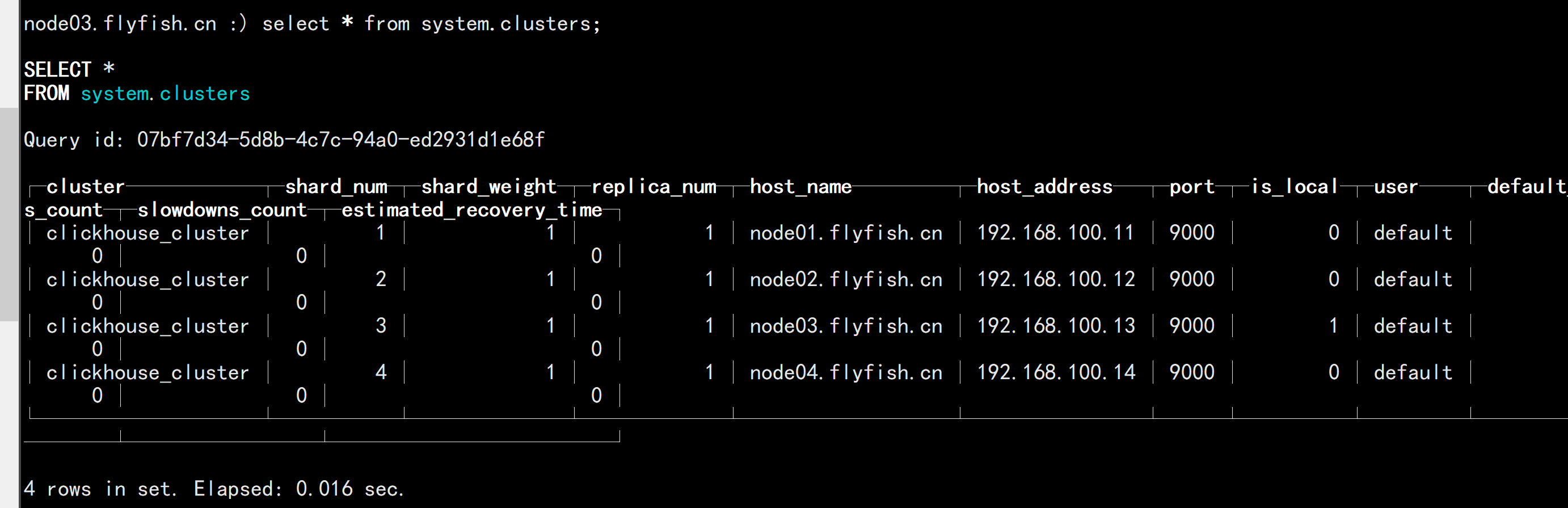

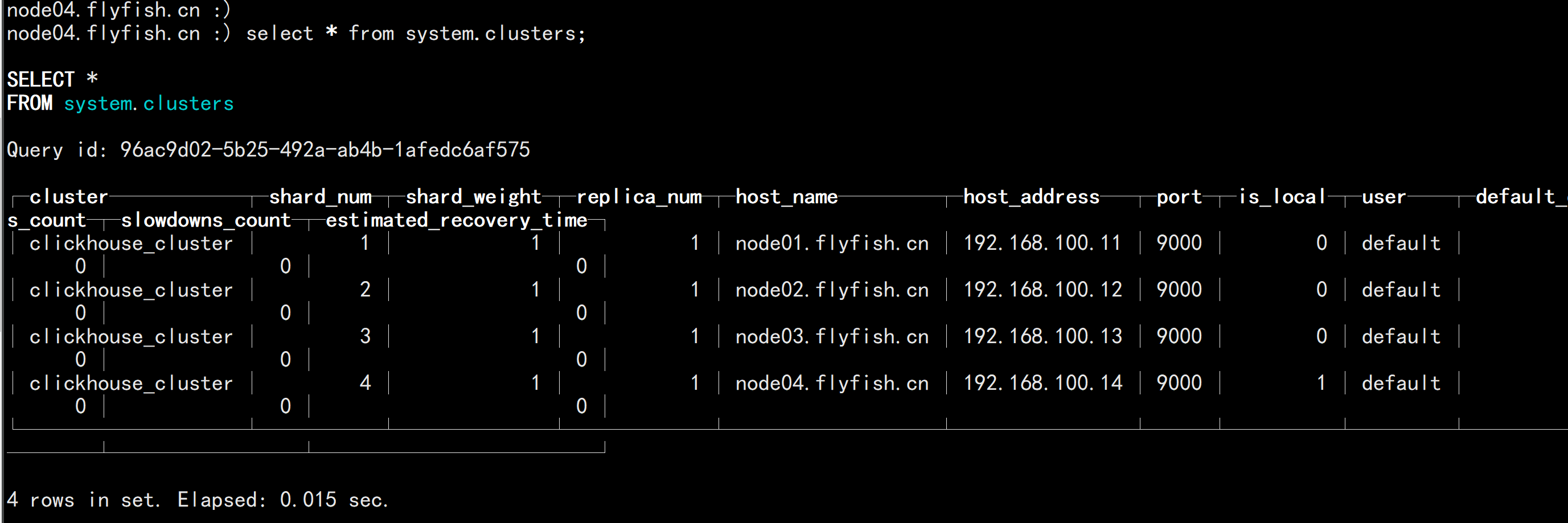

Log in to any one of the nodes:

clickhouse-client

select * from system.clusters;
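To confirm that every node sees the same cluster definition, the same query can be run against each host in turn. The loop below is a rough sketch: check_nodes and the CH_CLIENT variable are hypothetical names introduced here, and it assumes clickhouse-client is on the PATH and that each server accepts remote connections on the default port.

```shell
# Sketch: run the system.clusters query against several hosts.
# CH_CLIENT and check_nodes are hypothetical names used for illustration.
CH_CLIENT="${CH_CLIENT:-clickhouse-client}"
cluster_query='SELECT cluster, shard_num, replica_num, host_name, port FROM system.clusters'

check_nodes() {
  local host
  for host in "$@"; do
    echo "== ${host} =="
    # Report a failed connection but keep going with the remaining hosts.
    "$CH_CLIENT" --host "$host" --query "$cluster_query" \
      || echo "query failed on ${host}" >&2
  done
}

# Intended use:
#   check_nodes node01.flyfish.cn node02.flyfish.cn node03.flyfish.cn node04.flyfish.cn
```

Each node should list clickhouse_cluster with four shards; a node that still shows the default localhost clusters most likely did not pick up the edited remote_servers section.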

Source: https://blog.51cto.com/flyfish225/2821379