Tencent Cloud Native Online Tech Workshop - 202112

Author: Internet


[Original] https://tke-2gipdtus3676b965-1251009918.ap-shanghai.app.tcloudbase.com/docs/docker-start (Tencent Cloud Native Online Tech Workshop)

Chapter 1 Getting Started with Docker

(omitted)

Chapter 2 Docker and the Command Line

(omitted)

Chapter 3 Docker and Programming Languages

(omitted)

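Chapter 4 Open-Source Projects and Deployment

Goals and tasks:

- Understand how to mount Docker volumes;
- Learn how to start and connect to a MySQL database with Docker;
- Set up the open-source projects Ghost and WordPress with Docker;
- Sign up for Tencent Kubernetes Engine (TKE) and create an elastic cluster (EKS);
- Learn how to push images to Tencent Cloud's image registry (free Personal Edition);
- Learn how to deploy projects such as Ghost and WordPress to EKS through the visual console.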

4.1 Files and Data Storage

4.1.1 todo demo

- Download the source code:

wget https://hackweek-1251009918.cos.ap-shanghai.myqcloud.com/tke/d/getting-started-master.zip

- Code structure of app:

[root@master-101 app]# pwd

/sunny/myTKE/app

[root@master-101 app]#

[root@master-101 app]# tree -L 1

.

├── package.json

├── spec

├── src

└── yarn.lock

2 directories, 2 files

[root@master-101 app]#

- Dockerfile

[root@master-101 app]#

[root@master-101 app]# cat > Dockerfile

FROM node:12-alpine

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.ustc.edu.cn/g' /etc/apk/repositories

RUN apk add --no-cache python3 g++ make

RUN yarn config set registry http://mirrors.cloud.tencent.com/npm/

WORKDIR /app

COPY . .

RUN yarn install --production

CMD ["node", "src/index.js"]

[root@master-101 app]#

- Build the image

[root@master-101 app]# docker build -t tke-todo .

Sending build context to Docker daemon 4.663MB

Step 1/8 : FROM node:12-alpine

---> 106bb94759ad

Step 2/8 : RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.ustc.edu.cn/g' /etc/apk/repositories

---> Using cache

---> 82322ea41d10

Step 3/8 : RUN apk add --no-cache python3 g++ make

---> Using cache

---> 15e72162d811

Step 4/8 : RUN yarn config set registry http://mirrors.cloud.tencent.com/npm/

---> Using cache

---> d39f6f777582

Step 5/8 : WORKDIR /app

---> Using cache

---> 4d146fc8a328

Step 6/8 : COPY . .

---> Using cache

---> 8349068800c2

Step 7/8 : RUN yarn install --production

---> Using cache

---> 07cbb2cfcef5

Step 8/8 : CMD ["node", "src/index.js"]

---> Using cache

---> aece73128137

Successfully built aece73128137

Successfully tagged tke-todo:latest

[root@master-101 app]#

[root@master-101 app]# docker image ls | grep tke-todo

tke-todo latest aece73128137 7 days ago 395MB

[root@master-101 app]#

- Run the container. Starting it failed with the following error:

[root@master-101 app]# docker container run -dp 3001:3000 tke-todo

WARNING: IPv4 forwarding is disabled. Networking will not work.

d37bc9ed68ebdb0a5c711e891f2e3130ca5f21b0f98a747f2a1b0ea9bb2491c3

docker: Error response from daemon: driver failed programming external connectivity on endpoint thirsty_wright (3f3ea2ce1e6c7eb3e99f63df263d93ebc77793a178b0c681d732a080bb298242): (iptables failed: iptables --wait -t nat -A DOCKER -p tcp -d 0/0 --dport 3001 -j DNAT --to-destination 172.17.0.2:3000 ! -i docker0: iptables: No chain/target/match by that name.

(exit status 1)).

[root@master-101 ~]#

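Cause: the custom iptables chain DOCKER, which the Docker daemon creates in the nat table when it starts, had been flushed for some reason (restarting firewalld or the iptables service after Docker has started is a common culprit). Restarting the Docker service recreates the DOCKER chain: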

[root@master-101 ~]# systemctl restart docker

[root@master-101 ~]#

[root@master-101 app]# docker container run -dp 3001:3000 tke-todo

a9b522d82b2263ada943efbf0e54dd89c1d465c9cbfe58c1de5cd9ed63fd69ab

[root@master-101 app]#

[root@master-101 app]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a9b522d82b22 tke-todo "docker-entrypoint.s…" About a minute ago Up About a minute 0.0.0.0:3001->3000/tcp, :::3001->3000/tcp funny_spence

[root@master-101 app]#
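The failed run above also warned that IPv4 forwarding was disabled. Both symptoms can be checked from a shell before deciding to restart Docker. The sketch below assumes a Linux host with the standard /proc interface; ip_forward_enabled and docker_chain_present are hypothetical helper names, not part of Docker:

```shell
# Hypothetical helper: succeed if IPv4 forwarding is enabled.
# The file path is a parameter so the check can be pointed at a test file.
ip_forward_enabled() {
  [ "$(cat "${1:-/proc/sys/net/ipv4/ip_forward}" 2>/dev/null)" = "1" ]
}

# Hypothetical helper: succeed if the nat table still contains Docker's DOCKER chain.
docker_chain_present() {
  iptables -t nat -n -L DOCKER >/dev/null 2>&1
}

# Example, run as root on the Docker host:
#   ip_forward_enabled || sysctl -w net.ipv4.ip_forward=1
#   docker_chain_present || systemctl restart docker
```

Checking first is worthwhile because restarting the Docker daemon stops running containers unless live-restore is enabled.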

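4.1.2 Storage Volumes

Data written inside a container is not persisted: it lives in the container's writable layer and is lost when the container is removed. Yet data is the core of an application, so how should containers store it? Containers and cloud-native technologies such as Kubernetes offer many ways to store data; here we use the todo app as the example to introduce volumes.

Data that outlives the container that produced it is called persistent data. A volume's lifecycle does not depend on any single container, so it can store and share the "dynamic" files an application produces, such as database data, logs, user uploads, and the output of data processing.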

- Create a volume

[root@master-101 app]# docker volume create todo-db

todo-db

[root@master-101 app]#

[root@master-101 app]# docker volume ls| grep todo-db

local todo-db

[root@master-101 app]#

[root@master-101 app]# docker volume inspect todo-db

[

{

"CreatedAt": "2021-12-31T12:42:41+08:00",

"Driver": "local",

"Labels": {},

"Mountpoint": "/var/lib/docker/volumes/todo-db/_data",

"Name": "todo-db",

"Options": {},

"Scope": "local"

}

]

[root@master-101 app]#

- Mount the volume

[root@master-101 app]# docker run -dp 3002:3000 -v todo-db:/etc/todos tke-todo

2d4ca045f6503b173206a65522f35001dd16fb0a4ab87c863442c11a83eea0d0

[root@master-101 app]#

[root@master-101 app]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2d4ca045f650 tke-todo "docker-entrypoint.s…" 9 seconds ago Up 8 seconds 0.0.0.0:3002->3000/tcp, :::3002->3000/tcp quizzical_dewdney

a9b522d82b22 tke-todo "docker-entrypoint.s…" 19 minutes ago Up 19 minutes 0.0.0.0:3001->3000/tcp, :::3001->3000/tcp funny_spence

[root@master-101 app]#

[root@master-101 app]# docker container inspect quizzical_dewdney | grep Mounts -A 10

"Mounts": [

{

"Type": "volume",

"Name": "todo-db",

"Source": "/var/lib/docker/volumes/todo-db/_data",

"Destination": "/etc/todos",

"Driver": "local",

"Mode": "z",

"RW": true,

"Propagation": ""

}

[root@master-101 app]#

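A volume not only persists a container's data; several containers can also mount the same volume to share data.

4.1.3 Inspecting Containers

docker ps   # list running containers and find the ID of the one running tke-todo

docker exec -it <container ID or name> /bin/sh   # replace with your container ID or name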

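4.2 WordPress and a Database

- Open-source project WordPress: the official WordPress image

4.2.1 Creating MySQL with Docker

- Database MySQL: the official MySQL image

- Create a volume

docker volume create wordpress-mysql-data

- Start the database container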

docker run -d \

--name tke-mysql \

-v wordpress-mysql-data:/var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=tketke \

-e MYSQL_DATABASE=wordpress \

mysql:5.7
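On first start the official MySQL image initializes its data directory, which takes a while, so a client that connects immediately may be refused. A rough way to wait is to poll mysqladmin ping inside the container. The retry function below is a hypothetical helper; the container name tke-mysql and the password tketke are the ones used above:

```shell
# Hypothetical helper: run a command until it succeeds or the attempts run out.
# $1 = maximum attempts, $2 = seconds to wait between attempts, rest = command.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  until "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  return 0
}

# Usage: wait up to about a minute for MySQL to answer.
#   retry 30 2 docker exec tke-mysql mysqladmin ping -uroot -ptketke --silent
```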

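4.2.2 Installing WordPress with Docker

Normally we don't run WordPress and MySQL in the same container; each service runs in its own container. How can two separate containers talk to each other? That is what Docker networks are for.

- Create a network

Run the following command in a terminal to create a network named wordpress-net:

docker network create wordpress-net

- Attach the database to the network

Then run the following command to attach the tke-mysql container created earlier to the wordpress-net network:

docker network connect wordpress-net tke-mysql

- Look up the IP address of tke-mysql

docker inspect -f '{{ $network := index .NetworkSettings.Networks "wordpress-net" }}{{ $network.IPAddress}}' tke-mysql

- Start WordPress

Assume the IP of tke-mysql found above is 172.20.0.2. (Because both containers are on the user-defined network wordpress-net, Docker's built-in DNS also resolves the container name, so WORDPRESS_DB_HOST=tke-mysql:3306 works too and keeps working if the container's IP changes.)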

docker run -dp 8008:80 \

--name tke-wordpress \

--network wordpress-net \

-v wordpress_data:/var/www/html \

-e WORDPRESS_DB_HOST=172.20.0.2:3306 \

-e WORDPRESS_DB_USER=root \

-e WORDPRESS_DB_PASSWORD=tketke \

-e WORDPRESS_DB_NAME=wordpress \

wordpress

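4.3 Tencent Kubernetes Engine (TKE)

4.3.1 Creating a VPC and Subnet

When choosing the IPv4 CIDR block, the console already constrains the input, so generally all you need to watch is that the prefix length (the number after the slash) is not so large that the block holds too few IP addresses. A VPC can contain multiple subnets (up to 100 by default), and subnets in the same VPC can reach each other over the private network by default. Where possible, plan different CIDR blocks for different VPCs, and make sure subnets in the same VPC do not overlap. The CIDR masks of a VPC and its subnets cannot be changed once set, so when creating a subnet make sure its block has enough IP capacity (the console shows the number of remaining IPs in real time). For example, if the VPC CIDR is 10.0.0.0/16, a subnet can use any block within 10.0.0.0 to 10.0.255.255 whose mask is between /16 and /28.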
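The sizing rule above is simple arithmetic: a block with prefix length n holds 2^(32-n) addresses, and a subnet lies inside the VPC when the two agree on the VPC's prefix bits. A minimal sketch in POSIX shell, assuming IPv4 only; ip_to_int, cidr_size, and subnet_in_vpc are hypothetical helpers, and the /28 upper bound is taken from the text above:

```shell
# Hypothetical helpers for sanity-checking a subnet plan (POSIX sh arithmetic).

# Convert a dotted IPv4 address such as 10.0.1.0 to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Number of addresses in a block with the given prefix length.
cidr_size() {
  echo $(( 1 << (32 - $1) ))
}

# Succeed if subnet $2 (a.b.c.d/n) lies inside VPC $1 and its prefix length
# is between the VPC's own prefix and /28.
subnet_in_vpc() {
  vpc_ip=$(ip_to_int "${1%/*}"); vpc_pfx=${1#*/}
  sub_ip=$(ip_to_int "${2%/*}"); sub_pfx=${2#*/}
  [ "$sub_pfx" -ge "$vpc_pfx" ] && [ "$sub_pfx" -le 28 ] || return 1
  mask=$(( (0xFFFFFFFF << (32 - vpc_pfx)) & 0xFFFFFFFF ))
  [ $(( sub_ip & mask )) -eq $(( vpc_ip & mask )) ]
}
```

For instance, subnet_in_vpc 10.0.0.0/16 10.0.1.0/24 succeeds and cidr_size 24 prints 256, while a /29 subnet or 10.1.0.0/24 is rejected. The helpers do not check that the subnet address is aligned to its own prefix; the console remains the authority on which blocks it accepts.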

4.3.2 Creating an EKS Elastic Cluster

4.4 Image Registry

4.5 Deploying Applications Quickly with EKS

-

小知识

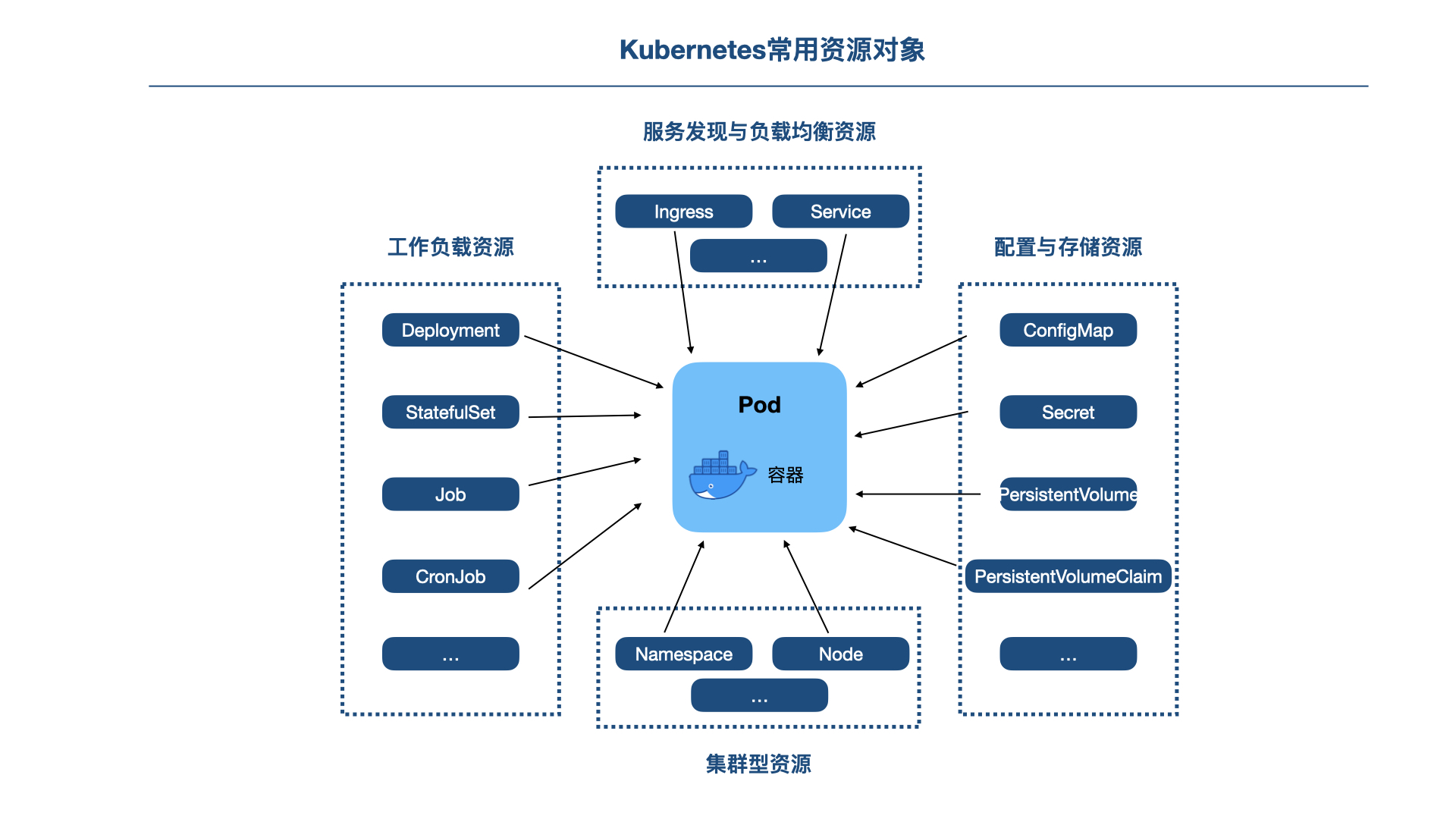

镜像要怎么操作才能在Kubernetes集群上运行呢?在大多数情况下,会使用工作负载如Deployment来创建Pod,而Pod类似于豌豆荚,容器就像豌豆荚里的一颗“豌豆”。Deployment声明了Pod的模板和控制Pod的运行策略,适用于部署无状态的应用程序。我们可以根据业务需求,对Deployment中运行的Pod的副本数、调度策略、更新策略等进行声明。

-

deployment

-

service

-

nginx

-

负载均衡

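Chapter 5 Kubernetes Quick Start

Goals and tasks for this chapter:

- Set up a local Kubernetes cluster with minikube;
- Get to know the Dashboards of local Kubernetes and EKS, and use them to learn basic Kubernetes concepts;
- Learn how to connect to and inspect EKS with kubectl, and learn basic kubectl commands;
- Understand the relationship between Nodes, Pods, and containers through hands-on practice.

This chapter uses CentOS 7 as the example platform.

5.1 Installing the Required Tools: kubectl and minikube

Installation guides for minikube and kubectl:

[minikube and kubectl] https://kubernetes.io/zh/docs/tasks/tools/

5.2 Creating a Local Kubernetes Cluster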

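- minikube start

When run as root with the docker driver, the command fails as shown below (DRV_AS_ROOT). Adding the --force flag gets past the check, but as the output warns, --force skips various validations; running minikube as a non-root user who belongs to the docker group avoids the problem altogether.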

[root@master-101 ~]# minikube start

* minikube v1.24.0 on Centos 7.6.1810

* Using the docker driver based on existing profile

* The "docker" driver should not be used with root privileges.

* If you are running minikube within a VM, consider using --driver=none:

* https://minikube.sigs.k8s.io/docs/reference/drivers/none/

* Tip: To remove this root owned cluster, run: sudo minikube delete

X Exiting due to DRV_AS_ROOT: The "docker" driver should not be used with root privileges.

[root@master-101 ~]#

[root@master-101 ~]# minikube start --force

* minikube v1.24.0 on Centos 7.6.1810

! minikube skips various validations when --force is supplied; this may lead to unexpected behavior

* Using the docker driver based on existing profile

* The "docker" driver should not be used with root privileges.

* If you are running minikube within a VM, consider using --driver=none:

* https://minikube.sigs.k8s.io/docs/reference/drivers/none/

* Tip: To remove this root owned cluster, run: sudo minikube delete

X The requested memory allocation of 1980MiB does not leave room for system overhead (total system memory: 1980MiB). You may face stability issues.

* Suggestion: Start minikube with less memory allocated: 'minikube start --memory=1980mb'

* Starting control plane node minikube in cluster minikube

* Pulling base image ...

* Restarting existing docker container for "minikube" ...

! This container is having trouble accessing https://k8s.gcr.io

* To pull new external images, you may need to configure a proxy: https://minikube.sigs.k8s.io/docs/reference/networking/proxy/

* Preparing Kubernetes v1.22.3 on Docker 20.10.8 ...

* Verifying Kubernetes components...

- Using image gcr.io/k8s-minikube/storage-provisioner:v5

- Using image kubernetesui/dashboard:v2.3.1

- Using image kubernetesui/metrics-scraper:v1.0.7

* Enabled addons: default-storageclass, storage-provisioner, dashboard

* Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

[root@master-101 ~]#

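- minikube dashboard

I run CentOS 7 without a GUI in a virtual machine on Windows 10, so even after the dashboard starts it cannot be opened in a browser on the VM. A proxy makes it reachable from the Windows 10 host.

- SSH session 1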

[root@master-101 ~]# minikube dashboard

* Verifying dashboard health ...

* Launching proxy ...

* Verifying proxy health ...

http://127.0.0.1:44662/api/v1/namespaces/kubernetes-dashboard/services/http:kubernetes-dashboard:/proxy/

- SSH session 2

[root@master-101 ~]# kubectl get po -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-78fcd69978-5vbww 1/1 Running 3 (3d8h ago) 7d20h

kube-system etcd-minikube 1/1 Running 3 (3d8h ago) 7d20h

kube-system kube-apiserver-minikube 1/1 Running 3 (5m7s ago) 7d20h

kube-system kube-controller-manager-minikube 1/1 Running 3 (3d8h ago) 7d20h

kube-system kube-proxy-42lp8 1/1 Running 3 (3d8h ago) 7d20h

kube-system kube-scheduler-minikube 1/1 Running 3 (5m7s ago) 7d20h

kube-system storage-provisioner 1/1 Running 9 (4m4s ago) 7d20h

kubernetes-dashboard kubernetes-dashboard-654cf69797-d9lm9 1/1 Running 7 (4m4s ago) 7d20h

[root@master-101 ~]#

- Check the dashboard's container port (9090):

kubectl describe po kubernetes-dashboard-654cf69797-d9lm9 -n kubernetes-dashboard

...

Containers:

kubernetes-dashboard:

Container ID: docker://7e412ac399215a8e210aff0609ee3a83d2e720cf184471573503c0d2ab97bcf1

Image: kubernetesui/dashboard:v2.3.1@sha256:ec27f462cf1946220f5a9ace416a84a57c18f98c777876a8054405d1428cc92e

Image ID: docker-pullable://kubernetesui/dashboard@sha256:ec27f462cf1946220f5a9ace416a84a57c18f98c777876a8054405d1428cc92e

Port: 9090/TCP

Host Port: 0/TCP

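...

- proxy

For other hosts to reach the dashboard, kubectl proxy must listen on an address other than localhost. The following command listens on all interfaces and accepts any Host header: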

kubectl proxy --address=0.0.0.0 --accept-hosts='^*$' --port=9090

Or, from the command line:

curl http://192.168.1.101:9090/api/v1/namespaces/kubernetes-dashboard/services/kubernetes-dashboard/proxy -L


5.3 Connecting to a Kubernetes Cluster with kubectl

5.3.1 Configuring kubeconfig

[root@master-101 ~]# cd ~/.kube/

[root@master-101 .kube]#

[root@master-101 .kube]# ll

total 4

drwxr-x---. 4 root root 35 Dec 27 01:00 cache

-rw-------. 1 root root 806 Jan 3 21:04 config

[root@master-101 .kube]# cat config
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /root/.minikube/ca.crt
    extensions:
    - extension:
        last-update: Mon, 03 Jan 2022 21:04:28 CST
        provider: minikube.sigs.k8s.io
        version: v1.24.0
      name: cluster_info
    server: https://192.168.49.2:8443
  name: minikube
contexts:
- context:
    cluster: minikube
    extensions:
    - extension:
        last-update: Mon, 03 Jan 2022 21:04:28 CST
        provider: minikube.sigs.k8s.io
        version: v1.24.0
      name: context_info
    namespace: default
    user: minikube
  name: minikube
current-context: minikube
kind: Config
preferences: {}
users:
- name: minikube
  user:
    client-certificate: /root/.minikube/profiles/minikube/client.crt
    client-key: /root/.minikube/profiles/minikube/client.key
[root@master-101 .kube]#

[root@master-101 .kube]# cp config{,.orig}

[root@master-101 .kube]#

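5.3.2 Connecting to EKS with kubectl

By default, kubectl looks for a file named config in the $HOME/.kube directory. You can use a different kubeconfig file by setting the KUBECONFIG environment variable or passing the --kubeconfig flag.

kubectl config view           # show the merged kubeconfig settings, or those of a specified kubeconfig file
kubectl config get-contexts   # list the contexts in kubeconfig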
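On Linux, KUBECONFIG can hold a colon-separated list of files, and kubectl merges them; that is how the minikube context and the EKS context can be used from one shell. The helper below is a hypothetical sketch that builds such a list from ~/.kube/config plus any ~/.kube/config_* files, following the config_tke naming used in this article:

```shell
# Hypothetical helper: print ~/.kube/config plus any ~/.kube/config_* files
# (for example config_tke) as one colon-separated list for KUBECONFIG.
kubeconfig_list() {
  dir=${1:-$HOME/.kube}
  list=""
  for f in "$dir"/config "$dir"/config_*; do
    [ -f "$f" ] || continue            # skip the literal pattern when nothing matches
    list="${list:+$list:}$f"
  done
  printf '%s\n' "$list"
}

# Usage:
#   export KUBECONFIG="$(kubeconfig_list)"
#   kubectl config get-contexts   # should now list both minikube and the cls-... context
```

The config.orig backup made earlier does not match config_* and is therefore not picked up.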

[root@master-101 .kube]# kubectl config view --kubeconfig ~/.kube/config_tke

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://111.231.210.222:443/
  name: cls-rh4p9mpp
contexts:
- context:
    cluster: cls-rh4p9mpp
    user: "100010274107"
  name: cls-rh4p9mpp-100010274107-context-default
current-context: cls-rh4p9mpp-100010274107-context-default
kind: Config
preferences: {}
users:
- name: "100010274107"
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

[root@master-101 .kube]#

[root@master-101 .kube]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* minikube minikube minikube default

[root@master-101 .kube]#

[root@master-101 .kube]# kubectl config get-contexts --kubeconfig ~/.kube/config_tke

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* cls-rh4p9mpp-100010274107-context-default cls-rh4p9mpp 100010274107

[root@master-101 .kube]#

[root@master-101 .kube]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* minikube minikube minikube default

[root@master-101 .kube]#

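The * marks the current context. The default ~/.kube/config shown above contains only the minikube context, so the EKS context has to be loaded as well before you can switch to it, for example with export KUBECONFIG=~/.kube/config:~/.kube/config_tke. After that, the following commands switch to the cloud context and back and forth between the local and cloud clusters:

kubectl config use-context cls-****-context-default   # switch to the cloud K8s cluster
kubectl config use-context minikube                   # switch to the local cluster
kubectl config use-context cls-****-context-default   # switch back to the cloud cluster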

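5.4 Dashboard and the Command Line

5.4.1 About Nodes

kubectl get nodes                   # list the nodes
kubectl describe node <node name>   # show the full status of a node
kubectl top node <node name>        # show the node's memory and CPU usage (requires metrics-server)

5.4.2 About Pods

kubectl get pods -o wide            # list Pods, including the node and IP of each
kubectl describe pod <pod name>     # show a Pod's details
kubectl top pod <pod name>          # show the Pod's memory and CPU usage (requires metrics-server)

5.4.3 About Deployment Workloads

kubectl get deployment                          # list all Deployments
kubectl describe deployment <deployment name>

5.4.4 About Namespaces

kubectl get namespaces

5.4.5 Services

kubectl get services
kubectl describe service <service name>

5.5 Managing Running Pods

kubectl get pods
kubectl logs <pod name>
kubectl exec -it <pod name> -- /bin/bash
# If the image has no bash (e.g. Alpine-based images), use sh instead:
# kubectl exec --stdin --tty <pod name> -- /bin/sh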
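Pod names such as nginx-deployment-66b6c48dd5-hd2xc are generated, so typing them is error-prone. One option is to look a Pod up by the label its Deployment sets. The first_pod function below is a hypothetical helper built on kubectl's jsonpath output; set KUBECONFIG to target the EKS cluster instead of minikube:

```shell
# Hypothetical helper: print the name of the first Pod matching a label selector.
first_pod() {
  kubectl get pods -l "$1" -o jsonpath='{.items[0].metadata.name}'
}

# Usage:
#   kubectl logs "$(first_pod app=nginx)"
#   kubectl exec -it "$(first_pod app=nginx)" -- /bin/sh
```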

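Chapter 6 Deploying Applications on Kubernetes

In production, typing commands directly leaves no record of what was run and supports only a limited set of options, which is why building images from a Dockerfile is recommended. Managing Kubernetes works the same way: learn it by combining the console, the command line, and yaml configuration files.

Goals for this chapter:

- Understand the relationship between yaml configuration files and Kubernetes objects;
- Master basic yaml syntax, especially the Lists and Maps data structures;
- Learn how to create a Deployment from a yaml file;
- Deploy WordPress with yaml configuration files.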

6.1 yaml and Kubernetes

6.1.1 Examining Existing yaml Descriptions

- Get the yaml configuration of each object:

kubectl get namespaces
kubectl get namespace <namespace name> -o yaml
kubectl get nodes
kubectl get node <node name> -o yaml
kubectl get pods
kubectl get pod <pod name> -o yaml
kubectl get deployments
kubectl get deployment <deployment name> -o yaml
kubectl get services
kubectl get service <service name> -o yaml

- Common Kubernetes resource objects

- Query the API versions and resource objects supported by the Kubernetes API:

kubectl api-versions
kubectl api-resources            # lists each resource's short names, API version, and kind
kubectl api-resources -o wide    # additionally lists the verbs each resource supports

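6.1.2 Kubernetes Objects and yaml

The yaml file for a Kubernetes object generally contains these five parts:

- apiVersion: the version of the Kubernetes API the object uses. It differs across Kubernetes versions and resource types; common values are v1, apps/v1, and so on;
- kind: the type of the object, for example Deployment, Namespace, Service, or PersistentVolume;
- metadata: data that helps uniquely identify the object, such as its name, UID, and labels, plus other information about it;
- spec: the object's specification, i.e. the state you want it to have, including containers, storage, volumes, and other parameters Kubernetes needs, and properties such as whether to restart a container when it fails;
- status: the object's current state, which is set and updated by the Kubernetes system and its components. You therefore normally leave it out of the files you write; it appears when you read an object back with kubectl get ... -o yaml.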
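Because status is filled in by Kubernetes, a hand-written manifest normally carries only the first four fields at its top level. The check_manifest function below is a hypothetical, rough sketch of that rule for single-document files; kinds such as ConfigMap, which hold data instead of spec, would be flagged by it:

```shell
# Hypothetical helper: rough check that a single-document manifest declares the
# top-level fields you write by hand. status is deliberately not required,
# because Kubernetes fills it in.
check_manifest() {
  rc=0
  for key in apiVersion kind metadata spec; do
    if ! grep -q "^${key}:" "$1"; then
      echo "$1: missing top-level field '$key'" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
#   check_manifest nginx-deployment.yaml && kubectl apply -f nginx-deployment.yaml
```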

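Important

spec and status are the core of a Kubernetes object. Each resource type has its own rich set of parameters, especially under spec and status. Which parameters an object has, which values they accept, and what they mean are all worth looking up in the official documentation, both while learning and in daily use:

yaml configuration reference: Kubernetes API. Note that this documentation is currently not translated into Chinese; with the Chinese language setting selected, no content is shown.

You can also use the kubectl explain command to see which fields an API object has: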

kubectl explain deployments

kubectl explain pods

kubectl explain pods.spec

kubectl explain pods.spec.containers

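6.2 yaml Syntax Quick Start

- Case-sensitive;
- Indentation expresses hierarchy;
- Indent with spaces only, never with the Tab key;
- The number of spaces does not matter, as long as elements at the same level are left-aligned;
- # starts a comment (at the start of a line or after whitespace); everything from it to the end of the line is ignored by the parser.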
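A Tab that sneaks into the indentation is easy to miss in an editor. A minimal sketch of a check with grep, assuming a single yaml file as input; find_tab_indent is a hypothetical helper name:

```shell
# Hypothetical helper: list lines whose indentation contains a Tab character
# (yaml forbids Tabs for indentation). Prints "line:content" for each hit and
# fails if any are found.
find_tab_indent() {
  tab=$(printf '\t')
  if grep -n "^ *${tab}" "$1"; then
    return 1
  fi
  return 0
}

# Usage:
#   find_tab_indent nginx-deployment.yaml && kubectl apply -f nginx-deployment.yaml
```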

6.2.2 Deploying nginx with a yaml Configuration File

[root@master-101 myTKE]# cat nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # create 2 replicas
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80
[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl get po -A --kubeconfig ~/.kube/config_tke

NAMESPACE NAME READY STATUS RESTARTS AGE

default nginx-6b489dcb49-4rts7 1/1 Running 0 73m

kube-system coredns-6599bd548d-jwxz5 1/1 Running 0 85m

kube-system coredns-6599bd548d-jxncc 1/1 Running 0 85m

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl apply -f ./nginx-deployment.yaml --kubeconfig ~/.kube/config_tke

deployment.apps/nginx-deployment created

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl get po -A --kubeconfig ~/.kube/config_tke

NAMESPACE NAME READY STATUS RESTARTS AGE

default nginx-6b489dcb49-4rts7 1/1 Running 0 73m

default nginx-deployment-66b6c48dd5-hd2xc 0/1 Pending 0 7s

default nginx-deployment-66b6c48dd5-kbfdj 0/1 Pending 0 7s

kube-system coredns-6599bd548d-jwxz5 1/1 Running 0 85m

kube-system coredns-6599bd548d-jxncc 1/1 Running 0 85m

[root@master-101 myTKE]#

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl get po -A --kubeconfig ~/.kube/config_tke

NAMESPACE NAME READY STATUS RESTARTS AGE

default nginx-6b489dcb49-4rts7 1/1 Running 0 74m

default nginx-deployment-66b6c48dd5-hd2xc 1/1 Running 0 33s

default nginx-deployment-66b6c48dd5-kbfdj 1/1 Running 0 33s

kube-system coredns-6599bd548d-jwxz5 1/1 Running 0 86m

kube-system coredns-6599bd548d-jxncc 1/1 Running 0 86m

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl describe deploy nginx-deployment --kubeconfig ~/.kube/config_tke

Name: nginx-deployment

Namespace: default

CreationTimestamp: Mon, 03 Jan 2022 22:00:02 +0800

Labels: <none>

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=nginx

Replicas: 2 desired | 2 updated | 2 total | 2 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=nginx

Containers:

nginx:

Image: nginx:1.14.2

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: nginx-deployment-66b6c48dd5 (2/2 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 2m29s deployment-controller Scaled up replica set nginx-deployment-66b6c48dd5 to 2

[root@master-101 myTKE]#

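6.2.3 kubectl apply

- There are three ways to manage Kubernetes objects: imperative commands, imperative object configuration, and declarative object configuration; declarative object configuration is recommended. For a comparison of the three, see the documentation: Kubernetes Object Management

# Imperative commands; common ones include run, create, scale, edit, patch, expose, and so on
kubectl create deployment nginx --image nginx

# Imperative object configuration; the general form is kubectl create|delete|replace|get -f <filename|url>
kubectl create -f nginx.yaml

# Declarative object configuration: objects are created and updated with kubectl apply, which compares the live object's current state with the configuration and applies the differences
kubectl apply -f configs/nginx.yaml

- kubectl apply -f does more than create objects; it also updates them. For example, change replicas in nginx-deployment.yaml above from 2 to 5 and apply it again, and the number of replicas grows to 5. (To delete objects, use kubectl delete -f; kubectl apply deletes objects only when run with --prune.)

kubectl apply -f ./nginx-deployment.yaml
kubectl get deployments
kubectl get pods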
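The edit step can also be scripted, which keeps the file as the single source of truth instead of scaling the live object by hand. A minimal sketch with sed, assuming the manifest has exactly one replicas: line; set_replicas is a hypothetical helper:

```shell
# Hypothetical helper: set the number after "replicas:" in a manifest file.
# Only the number is rewritten; a trailing comment on that line is kept as-is.
set_replicas() {
  case $2 in
    ''|*[!0-9]*) echo "replicas must be a non-negative integer" >&2; return 1 ;;
  esac
  sed "s/^\( *replicas:\) *[0-9][0-9]*/\1 $2/" "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# Usage:
#   set_replicas nginx-deployment.yaml 5
#   kubectl apply -f ./nginx-deployment.yaml
#   kubectl get deployments
```

Writing to a temporary file and renaming it avoids depending on GNU sed's -i option.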

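- kubectl apply -f accepts not only a single file but also multiple files, a directory, or a URL:

# Create resource objects from a single file
kubectl apply -f ./my-manifest.yaml

# Create resource objects from multiple files
kubectl apply -f ./my1.yaml -f ./my2.yaml

# Create resource objects from the manifests in a directory
kubectl apply -f ./dir

# Create resource objects from a URL
kubectl apply -f https://k8s.io/examples/controllers/nginx-deployment.yaml

6.3 Networking, Services, and Load Balancing

6.3.1 Accessing an Application in the Cluster via Port Forwarding

Earlier we used kubectl apply to create the nginx Deployment, but how do we reach it? kubectl port-forward maps a local port to a port of a Pod, which lets you access an application (Pod) in the cluster. Its syntax is:

kubectl port-forward <resource type>/<resource name> [local port]:<pod port>

Taking the nginx Deployment created earlier as an example, run the following commands in a terminal, then open localhost:5001 in a browser to reach nginx. (port-forward listens on 127.0.0.1 by default; add --address 0.0.0.0 to reach it from another machine, such as the Windows host in this setup. If the Deployment is on EKS, also pass --kubeconfig ~/.kube/config_tke.)

kubectl get deployments   # list the Deployment names
kubectl port-forward deployment/nginx-deployment 5001:80

6.3.2 Creating a Service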

[root@master-101 myTKE]#

[root@master-101 myTKE]# cat nginx-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  labels:
    app: nginx
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
[root@master-101 myTKE]#

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl get service --kubeconfig ~/.kube/config_tke

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 94m

nginx LoadBalancer 192.168.2.187 111.231.210.221 12380:30532/TCP 82m

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl apply -f ./nginx-service.yaml --kubeconfig ~/.kube/config_tke

service/nginx-service created

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl get service --kubeconfig ~/.kube/config_tke

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 95m

nginx LoadBalancer 192.168.2.187 111.231.210.221 12380:30532/TCP 83m

nginx-service NodePort 192.168.2.189 <none> 80:32058/TCP 3s

[root@master-101 myTKE]#
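In the PORT(S) column, a NodePort Service shows port:nodePort/protocol, so 80:32058/TCP means Service port 80 is also exposed on port 32058 of every node (node ports come from the 30000-32767 range by default). The hypothetical helper below pulls the node port out of such a value; kubectl get service nginx-service -o jsonpath='{.spec.ports[0].nodePort}' reads the same number straight from the API:

```shell
# Hypothetical helper: extract the node port from a PORT(S) value as printed by
# kubectl get service, e.g. "80:32058/TCP" (service port:node port/protocol).
node_port() {
  case $1 in
    *:*/*) ;;                  # only NodePort/LoadBalancer entries have a node port
    *) return 1 ;;
  esac
  rest=${1#*:}                 # "80:32058/TCP" -> "32058/TCP"
  echo "${rest%%/*}"           # "32058/TCP"    -> "32058"
}

# Usage: node_port 80:32058/TCP   # prints 32058
```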

6.3.3 Making the Service Reachable from the Public Internet

[root@master-101 myTKE]# cat nginx-lb.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-lb
  namespace: default
spec:
  ports:
  - name: tcp-80-80
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  type: LoadBalancer
[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl apply -f ./nginx-lb.yaml --kubeconfig ~/.kube/config_tke

service/nginx-lb created

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl get service --kubeconfig ~/.kube/config_tke

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 97m

nginx LoadBalancer 192.168.2.187 111.231.210.221 12380:30532/TCP 85m

nginx-lb LoadBalancer 192.168.15.228 <pending> 80:32018/TCP 7s

nginx-service NodePort 192.168.2.189 <none> 80:32058/TCP 2m26s

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl get service --kubeconfig ~/.kube/config_tke

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 97m

nginx LoadBalancer 192.168.2.187 111.231.210.221 12380:30532/TCP 85m

nginx-lb LoadBalancer 192.168.15.228 <pending> 80:32018/TCP 12s

nginx-service NodePort 192.168.2.189 <none> 80:32058/TCP 2m31s

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl get service --kubeconfig ~/.kube/config_tke

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 97m

nginx LoadBalancer 192.168.2.187 111.231.210.221 12380:30532/TCP 85m

nginx-lb LoadBalancer 192.168.15.228 139.155.65.80 80:32018/TCP 20s

nginx-service NodePort 192.168.2.189 <none> 80:32058/TCP 2m39s

[root@master-101 myTKE]#
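As the transcript shows, EXTERNAL-IP stays <pending> until the cloud load balancer has been provisioned, so the command had to be repeated. A minimal polling sketch, assuming the external address is reported in .status.loadBalancer.ingress[0].ip; wait_for_lb_ip and the WAIT_INTERVAL variable are hypothetical:

```shell
# Hypothetical helper: poll a LoadBalancer Service until Kubernetes reports an
# external IP, then print it. $2 = maximum attempts (default 30); the pause
# between attempts is WAIT_INTERVAL seconds (default 5).
wait_for_lb_ip() {
  svc=$1
  tries=${2:-30}
  n=0
  while [ "$n" -lt "$tries" ]; do
    ip=$(kubectl get service "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    n=$((n + 1))
    sleep "${WAIT_INTERVAL:-5}"
  done
  echo "no external IP for $svc after $tries attempts" >&2
  return 1
}

# Usage, against the EKS cluster:
#   KUBECONFIG=~/.kube/config_tke wait_for_lb_ip nginx-lb
```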

6.4 k8s 存储

6.4.1 创建腾讯云CFS

6.4.2 创建PV以及PVC

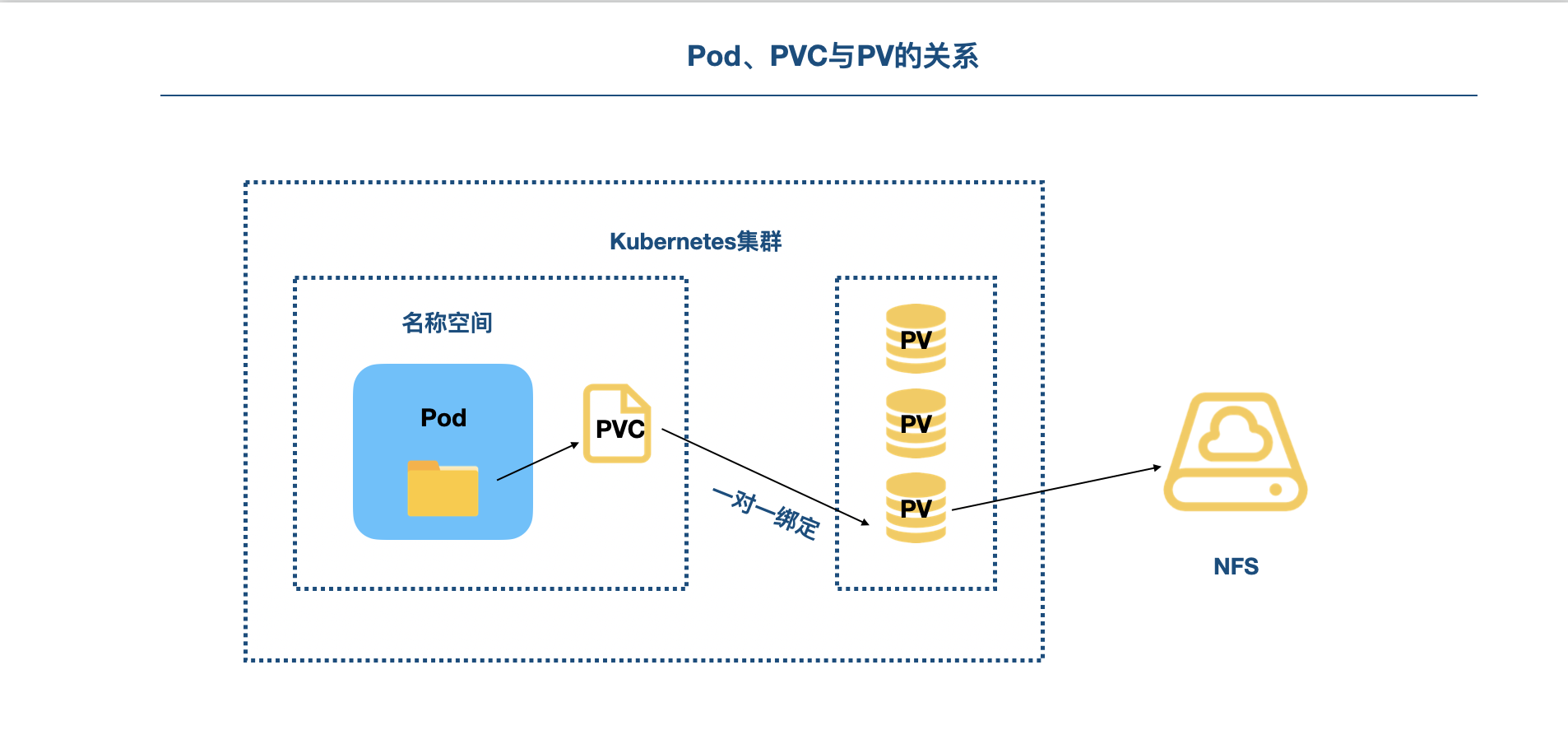

持久卷(PersistentVolume,PV)是集群中的一块存储,可以由管理员事先供应,持久卷是集群资源,就像节点Node也是集群资源一样。PV持久卷和普通的Volume一样,也是使用卷插件来实现的,只是它们拥有独立于任何使用 PV 的 Pod 的生命周期。而持久卷申领(PersistentVolumeClaim,PVC)表达的是用户对存储的请求,PVC申领会耗用PV资源。

pod, pv和pvc关系:

[root@master-101 myTKE]#

[root@master-101 myTKE]# cat cfs.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: cfs
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  mountOptions:
    - hard
    - nfsvers=4
  nfs:
    path: /
    server: 10.0.255.251 # replace with the IP address of your CFS file system
[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl get pv --kubeconfig ~/.kube/config_tke

No resources found

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl apply -f ./cfs.yaml --kubeconfig ~/.kube/config_tke

persistentvolume/cfs created

[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl get pv --kubeconfig ~/.kube/config_tke

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

cfs 10Gi RWX Retain Available 3s

[root@master-101 myTKE]#

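[root@master-101 myTKE]# cat cfsclaim.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cfsclaim
spec:
  accessModes:
    - ReadWriteMany
  volumeMode: Filesystem
  storageClassName: ""
  resources:
    requests:
      storage: 10Gi
[root@master-101 myTKE]#

Apply the claim with kubectl apply -f ./cfsclaim.yaml --kubeconfig ~/.kube/config_tke; the PV's status then changes to Bound: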

[root@master-101 myTKE]# kubectl get pv --kubeconfig ~/.kube/config_tke

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

cfs 10Gi RWX Retain Bound default/cfsclaim 63s

[root@master-101 myTKE]#
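The claim bound to cfs because the PV satisfies it: both use ReadWriteMany and Filesystem mode, the claim's storageClassName "" matches a PV without a storage class, and the PV's capacity is at least the requested size. The capacity part of that rule can be sketched as below; to_bytes and pv_fits are hypothetical helpers that understand only plain integers and the binary suffixes Ki, Mi, Gi, and Ti, whereas Kubernetes also accepts decimal suffixes such as M and G:

```shell
# Hypothetical helper: convert a quantity such as 10Gi to bytes.
to_bytes() {
  num=${1%[KMGT]i}
  case $num in
    ''|*[!0-9]*) return 1 ;;   # reject decimals, other suffixes, empty input
  esac
  case $1 in
    *Ki) echo $((num << 10)) ;;
    *Mi) echo $((num << 20)) ;;
    *Gi) echo $((num << 30)) ;;
    *Ti) echo $((num << 40)) ;;
    *)   echo "$num" ;;
  esac
}

# Hypothetical helper: succeed if a PV of capacity $1 is large enough
# for a claim requesting $2.
pv_fits() {
  have=$(to_bytes "$1") && want=$(to_bytes "$2") || return 1
  [ "$have" -ge "$want" ]
}

# Usage: pv_fits 10Gi 10Gi && echo "cfs can satisfy cfsclaim"
```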

6.4.3 Mounting the Storage and Accessing the Application

[root@master-101 myTKE]# cat tke-todo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tke-todo-deployment
  labels:
    k8s-app: tke-todo
    qcloud-app: tke-todo
spec:
  selector:
    matchLabels:
      k8s-app: tke-todo
      qcloud-app: tke-todo
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: tke-todo
        qcloud-app: tke-todo
    spec:
      containers:
      - name: tketodo
        image: ccr.ccs.tencentyun.com/tkegroup/tke-start:1.0.1
        ports:
        - containerPort: 3000
        volumeMounts:
        - mountPath: "/etc/todos"
          name: mypd
      volumes:
      - name: mypd
        persistentVolumeClaim:
          claimName: cfsclaim
      imagePullSecrets:
      - name: qcloudregistrykey
[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl apply -f ./tke-todo.yaml --kubeconfig ~/.kube/config_tke

deployment.apps/tke-todo-deployment created

[root@master-101 myTKE]#

[root@master-101 myTKE]# cat tke-todo-clb.yaml
apiVersion: v1
kind: Service
metadata:
  name: tke-todo-clb
  namespace: default
spec:
  ports:
  - name: tcp-3000-80
    port: 80
    protocol: TCP
    targetPort: 3000
  selector:
    k8s-app: tke-todo
    qcloud-app: tke-todo
  type: LoadBalancer
[root@master-101 myTKE]#

[root@master-101 myTKE]# kubectl apply -f ./tke-todo-clb.yaml --kubeconfig ~/.kube/config_tke

service/tke-todo-clb created

[root@master-101 myTKE]#

Source: https://www.cnblogs.com/zhxu/p/15760860.html